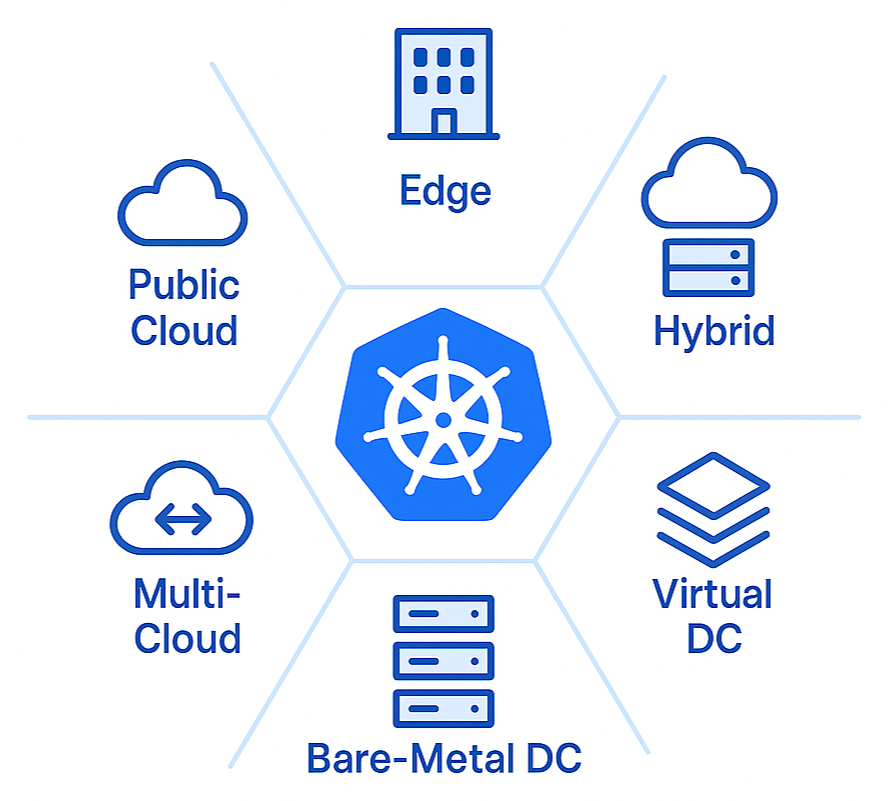

Edge, cloud, hybrid, virtual, bare‑metal, or multi-cloud? Deciding on your perfect Kubernetes architecture

From cloud to edge: making your choices

Working at Spectro Cloud has taught me that most K8s adopters don't live in a single infrastructure environment anymore. (Our research in the annual State of Production Kubernetes study backs this up.)

Of course, your choice of which infrastructure environments you use has pros and cons, and it needs to match your organization's use cases and the teams within it.

And there are a lot of choices.

Cloud computing remains desirable for many organizations. I've worked at a few startups, and they often face a dilemma: cost savings versus initial hardware expenditure. Cloud services enable new companies to get going fast, without upfront hardware purchases, and can also provide impressive new technologies that companies want to use.

There are more choices than ever beyond the three main hyperscalers, including dedicated sovereign and GPU clouds. However, in any cloud service, costs can spiral without strict management.

If you have both on-prem and cloud environments, you may be considering repatriation for your specialist and intensive workloads, enabling a hybrid architecture. This allows you to keep predictable costs for your on-prem environment, while benefiting from the flexibility and elasticity of the cloud if you need it.

Companies in retail or telecommunications (and many other sectors) often require edge computing due to its ultra-low latency, resiliency, and data locality. A recent cyber attack on a UK retail store highlighted the importance of local device availability, enabling in-store purchases to continue despite online order disruption. Such resilience is crucial for protecting businesses during worst-case scenarios.

Bare-metal offers maximum performance and hardware-level control, plus potential license cost savings. However, it can often be challenging for companies to implement.

These are just some of the deployment options available, and deciding which one (or which ones) to use can be tricky without experience. Let's highlight some of the advantages and drawbacks of each infrastructure and the use cases for each one. You can then discover how to manage them all from a single platform.

Six Kubernetes deployment options at a glance

Kubernetes can run virtually anywhere, from cloud platforms to small edge devices. These are some of the most common infrastructure architectures.

Public cloud

Public cloud means running your Kubernetes nodes as VMs on a hyperscaler, whether you manage them yourself (on an IaaS service like EC2) or use a managed K8s service (like EKS, AKS or GKE) to handle the control plane. You get fast, on‑demand capacity and access to cloud‑native services, but you trade that convenience for tighter coupling to the provider’s APIs and pricing model.

What are the advantages?

Elastic, pay-as-you-go capacity

You can spin resources up or down in minutes, especially when paired with an autoscaler like Karpenter, matching cost to demand instead of having to procure and provision compute hardware in advance.
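
To make "matching cost to demand" concrete, here is a minimal sketch of the sizing arithmetic behind elastic capacity. It is not how Karpenter or any specific autoscaler is implemented; the function name, node size, and pod requests are hypothetical, and it ignores bin-packing, memory, and per-node overhead.

```python
import math

def nodes_needed(pod_cpu_requests, node_cpu):
    """Return how many equally sized nodes cover the summed CPU requests.

    This is only a lower bound on what a real autoscaler would provision.
    """
    if node_cpu <= 0:
        raise ValueError("node_cpu must be positive")
    return math.ceil(sum(pod_cpu_requests) / node_cpu)

# Hypothetical demand, in CPU cores requested per pod.
quiet = [0.5] * 6    # 3 cores requested in total
spike = [0.5] * 40   # 20 cores requested in total

print(nodes_needed(quiet, node_cpu=4))  # 1
print(nodes_needed(spike, node_cpu=4))  # 5
```

In a pay-as-you-go model you would be billed for one node during the quiet period and five during the spike, rather than owning five nodes all year.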

Breadth of managed services

Databases, AI/ML, security tooling, and hundreds of niche functions arrive “as a service”, letting you focus on product logic over infrastructure.

Operational offload

The provider patches, powers, and replaces hardware, reducing the burden on internal datacenter staff and enabling a largely software-defined cloud operations skill set.

What are the drawbacks?

Cost can climb sharply at scale

High sustained utilization, large data sets, or network egress often cost more than when using owned hardware. Organizations sometimes need complete data copies in multiple jurisdictions, increasing costs significantly.
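
As a rough illustration of why sustained utilization matters, the sketch below computes the utilization at which owned hardware becomes cheaper than on-demand instances. All prices are hypothetical, and the model deliberately ignores egress, storage, staffing, power, and discounts, so treat it as back-of-the-envelope arithmetic rather than a pricing tool.

```python
HOURS_PER_MONTH = 730  # approximate average hours in a month

def break_even_utilization(on_demand_hourly, owned_monthly):
    """Fraction of the month a node must be busy before owning is cheaper."""
    if on_demand_hourly <= 0:
        raise ValueError("on_demand_hourly must be positive")
    return owned_monthly / (on_demand_hourly * HOURS_PER_MONTH)

def cheaper_option(utilization, on_demand_hourly, owned_monthly):
    """Compare monthly cost at a given utilization (0.0 to 1.0)."""
    cloud_monthly = on_demand_hourly * HOURS_PER_MONTH * utilization
    return "cloud" if cloud_monthly < owned_monthly else "owned"

# Hypothetical prices: 0.50 per hour on demand vs. 182.50 per month owned.
print(break_even_utilization(0.5, 182.5))  # 0.5
print(cheaper_option(0.2, 0.5, 182.5))     # cloud
print(cheaper_option(0.9, 0.5, 182.5))     # owned
```

With these assumed numbers, a node that is busy less than half the time is cheaper in the cloud, while one running at 90% utilization is cheaper to own.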

Data-sovereignty & compliance hurdles

Regulations requiring data to remain within specific borders or under direct customer control can be tricky or impossible to satisfy in shared infrastructure.

Vendor lock-in risk

Proprietary APIs and integration with managed services can make migration expensive, slowing competitive negotiations or multi-cloud strategies.

Public cloud excels when you value agility, global reach, and a rich managed service catalog. Still, it introduces cost, control, and lock-in considerations that must be actively managed.

Edge

Edge means deploying clusters at or near the point where data is generated (e.g. stores, factories, telecommunication sites). Footprints may vary from micro-clusters behind a retail counter to entire rack-scale stacks in a micro data center at an industrial plant. In most cases, the goal is to run latency-sensitive or bandwidth-heavy workloads locally. AI is also a growing reason for edge placement, as copilots and predictive maintenance drive heavier workloads on location.

What are the advantages?

Ultra-low latency

Processing data metres from the source slashes round-trip times, enabling real-time control for use cases like retail stores, traffic management, and robotics.

Data-sovereignty & privacy compliance

Sensitive information can stay inside a plant, city, or retail outlet, helping meet regional residency rules and easing concerns over transmitting raw personal data to public clouds.

Operational resilience

Local compute keeps critical functions alive through back-haul outages or cloud incidents; stores keep selling, and factory lines keep running even if the uplink is down.
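
One common way to get this behavior is a store-and-forward pattern: the site records work locally and syncs upstream when the link is available. The sketch below is a toy model under that assumption; the `EdgeSite` class and its fields are hypothetical and stand in for whatever local application and central system you actually run.

```python
from collections import deque

class EdgeSite:
    """Hypothetical edge site that keeps accepting sales while the uplink is down."""

    def __init__(self):
        self.uplink_up = True
        self.pending = deque()  # sales recorded locally, not yet synced
        self.synced = []        # stand-in for the central or cloud system

    def record_sale(self, sale):
        # The sale always succeeds locally, regardless of uplink state.
        self.pending.append(sale)
        if self.uplink_up:
            self.flush()

    def flush(self):
        # Push buffered sales upstream in the order they were recorded.
        while self.pending:
            self.synced.append(self.pending.popleft())

store = EdgeSite()
store.record_sale("order-1")
store.uplink_up = False        # simulated back-haul outage
store.record_sale("order-2")
store.record_sale("order-3")
print(len(store.pending))      # 2
store.uplink_up = True         # connectivity restored
store.flush()
print(store.synced)            # ['order-1', 'order-2', 'order-3']
```

The store never stops selling during the outage; it simply accumulates a backlog that drains once the uplink returns.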

What are the drawbacks?

Operational complexity scales with site count

Hundreds or thousands of micro-sites mean patching, monitoring, and securing many endpoints. Field engineering visits may often be required, and shipping and replacing hardware can be challenging.

Fragmented standards & tooling

Diverse hardware architectures, networking stacks, and orchestration frameworks make application portability and lifecycle management harder.

Security surface expansion

Remote boxes increase physical and cyber-attack risks that may require hardening measures that are costly and complex, such as zero-trust networking and hardware security modules.

Edge excels when milliseconds matter, data volumes are enormous, or legal/operational realities require keeping processing close to the source. However, those benefits arrive with a tax: more nodes to harden, patch, and power, plus higher capital expenditure and tooling overhead.

Virtual data center

Clusters in a virtual data center consist of nodes that operate as virtual machines (VMs) on a private or dedicated virtualization platform like VMware vSphere, OpenStack, or Nutanix. You maintain administrative control, regardless of whether the hypervisor is on-premises, in a colocation facility, or a hosted private cloud.

What are the advantages?

Familiar tooling

Most organizations are very familiar with managing hypervisors and their network and storage constructs, after decades of VM usage. Running K8s on top feels relatively straightforward.

Template‑driven node scaling

VM templates let Kubernetes autoscalers spin up or retire worker nodes in minutes, delivering near-cloud elasticity.

Built‑in HA and live‑migration for nodes

Features like vSphere HA and vMotion in virtualized datacenters automatically restart Kubernetes VMs after host failures or live-migrate them during maintenance, ensuring high availability.

What are the drawbacks?

Elasticity is limited to the reserved capacity

Whether you own or rent, scaling beyond your committed pool requires a change order or new contract, so accommodating unexpected bursts can be slower (and costlier) than in a true pay-as-you-go cloud.

You pay for idle resources

Because capacity is pre-allocated, underutilized VMs still incur the full monthly charge from your colo or hosting provider, eroding the pay-per-use benefit you would get from a cloud vendor.
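
The effect of idle capacity is easy to quantify. The sketch below, using hypothetical figures, shows how the cost of each vCPU that is actually doing work rises as utilization of a flat-rate reserved pool falls.

```python
def cost_per_used_vcpu(monthly_charge, reserved_vcpus, utilization):
    """Monthly cost of each vCPU that is actually doing work."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return monthly_charge / (reserved_vcpus * utilization)

# Hypothetical pool: 100 reserved vCPUs for a flat 1,000 per month.
print(cost_per_used_vcpu(1000, 100, 1.0))   # 10.0
print(cost_per_used_vcpu(1000, 100, 0.25))  # 40.0
```

At 25% utilization, each productive vCPU in this example effectively costs four times as much as it would in a fully used pool.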

Provider and platform lock-in risk

Heavy reliance on a specific virtualization stack and proprietary management portals can make multi-cloud strategies or future migrations expensive and complex.

A virtual data center provides a balanced approach between on-premises bare metal hardware and hyperscale cloud solutions. It offers familiar tools, a degree of elasticity, and built‑in node resilience, making it ideal for stable enterprise applications prioritizing VM parity and compliance. Before committing, you should consider the potential idle-capacity expenses, a more limited service catalog, and vendor lock-in.

Bare metal data center

Bare metal means your Kubernetes nodes run straight on physical servers. No hypervisor, just raw silicon. You gain performance and full OS-level control, and possibly some license cost savings, but you also inherit the hands-on hardware work and slower, rack-based scaling that come with it.

What are the advantages?

Maximum, predictable performance

Applications run directly on the hardware with zero hypervisor overhead, giving consistent CPU, memory, and I/O throughput. This is vital for latency-sensitive trading systems, real-time analytics, or high-performance computing (including AI).

Full hardware customization

You can use high-clock CPUs, large RAM footprints, NVMe fabrics, GPUs/FPGAs, or specialized network cards. These options may be unavailable or costly in virtual or public cloud tiers.

Deterministic cost model

Typically, pricing involves a fixed monthly maintenance cost, protecting budgets from fluctuating usage and network egress charges.

What are the drawbacks?

Limited elasticity & slower provisioning

Scaling bare metal Kubernetes means imaging each server, setting up the OS, and bootstrapping the control plane, all of which increases lead time. As there is no cloud-style API for autoscaling, it may be necessary to overprovision resources upfront. However, tools like Canonical MAAS or Cluster API's MAAS provider (used by Spectro Cloud Palette) can automate much of that build process for you.

Under-utilization risk

Idle CPU and GPU cycles on physical servers incur sunk costs in hardware, power, cooling, and maintenance without contributing to productivity.

Operational burden

Bare metal requires hardware monitoring, firmware updates, disk swaps, and physical security. This necessitates specialized staff and processes, or outsourcing at added cost and with vendor lock-in risk. Unlike in cloud or hosted hypervisor environments, where the provider absorbs much of this work, these tasks stay with you.

Choosing bare metal for Kubernetes allows you to select the precise OS, hardening frameworks, and access policies for each node. This level of control is not available when a third party manages the hypervisor. Additionally, running directly on the hardware eliminates an entire layer of software to purchase, patch, and learn. However, this approach requires you to handle all hardware maintenance and provisioning yourself.

Multicloud

Deploying on multiple clouds means running separate clusters on different cloud providers to meet redundancy, performance, or regulatory needs. This is not the same as stretching a single Kubernetes control plane across multiple providers, which can introduce serious latency issues, amongst other concerns.

Multicloud has become the primary driver of K8s workload placement according to our 2025 research.

What are the advantages?

Negotiation leverage & cost optimization

Distributing spending across providers enables price comparison and workload migration to the most cost-effective platform. This lessens dependence on a single proprietary stack, reducing switching costs, simplifying exit strategies, and increasing architectural flexibility.

Improved resilience & DR posture

Running active and standby instances in different clouds protects against regional or provider-wide outages that could take cloud estates offline.

Broader geographic & regulatory coverage

Multicloud enables compliance with latency targets and data-residency mandates.
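
To illustrate how separate clusters on different providers can serve both residency and resilience goals, here is a minimal placement sketch. The cluster names, provider labels, and regions are hypothetical, and a real setup would rely on your fleet management tooling rather than a hard-coded list.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Cluster:
    name: str
    provider: str
    region: str

# Hypothetical fleet: independent clusters, not one stretched control plane.
CLUSTERS = [
    Cluster("payments-eu", "cloud-a", "eu"),
    Cluster("payments-us", "cloud-b", "us"),
    Cluster("analytics-eu", "cloud-b", "eu"),
]

def eligible_clusters(required_region, clusters=CLUSTERS):
    """Return clusters that satisfy a data-residency requirement."""
    return [c for c in clusters if c.region == required_region]

def pick_with_fallback(required_region, unavailable_providers=()):
    """Pick the first eligible cluster whose provider is not in an outage."""
    for cluster in eligible_clusters(required_region):
        if cluster.provider not in unavailable_providers:
            return cluster
    return None

print(pick_with_fallback("eu").name)               # payments-eu
print(pick_with_fallback("eu", {"cloud-a"}).name)  # analytics-eu
print(pick_with_fallback("us", {"cloud-b"}))       # None
```

The last call shows the limit of the approach: if only one provider serves a region, an outage there leaves no compliant fallback.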

What are the drawbacks?

Operational and architectural complexity

Clouds vary in consoles, SDKs, IAM, and billing, increasing engineering overhead for pipeline parity, observability, and security. Teams must learn multiple ecosystems, slowing onboarding and diluting focused expertise.

Higher tooling and network costs

Cross-cloud traffic incurs egress charges, and solutions for identity, networking, and monitoring increase license and run-time expenses.

Governance & compliance sprawl

More accounts, policies, and audit trails increase the attack surface and the burden on security teams to enforce sufficient policies.

Multicloud offers leverage, resilience, and service choice, but those benefits come with a complexity premium. It requires a willingness to invest in cross-cloud automation, unified policy frameworks, and a skilled workforce proficient in operating multiple platforms. Deploying in one cloud doesn't mean you can copy-and-paste to another. Workloads often need substantial rework because of seemingly minor architectural differences between providers (such as how managed SQL databases are accessed).

Hybrid

A hybrid environment extends a single Kubernetes control plane across on-prem and cloud nodes. It’s typically used to keep latency-sensitive or regulated data on-site while bursting to the cloud for extra capacity under one set of policies, networking, and observability tools. EKS Hybrid Nodes is a good example of this.
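
The "keep it on-site, burst when needed" idea can be sketched as a simple placement rule. This is a toy model, not the Kubernetes scheduler: in practice you would express the same intent with node labels, affinity rules, and taints. The pod names, CPU figures, and the `regulated` flag are hypothetical.

```python
def place_pods(pods, on_prem_free_cpu):
    """Fill on-prem capacity first, then burst unregulated pods to the cloud.

    Each pod is a (name, cpu, regulated) tuple. Regulated pods never leave
    the site; they stay pending if on-prem capacity runs out.
    """
    remaining = on_prem_free_cpu
    placements = {}
    for name, cpu, regulated in pods:
        if cpu <= remaining:
            placements[name] = "on-prem"
            remaining -= cpu
        elif regulated:
            placements[name] = "pending"
        else:
            placements[name] = "cloud"
    return placements

pods = [
    ("db", 4, True),
    ("api", 2, False),
    ("batch-1", 2, False),
    ("batch-2", 2, False),
]
print(place_pods(pods, on_prem_free_cpu=8))
# {'db': 'on-prem', 'api': 'on-prem', 'batch-1': 'on-prem', 'batch-2': 'cloud'}
```

Only the overflow (`batch-2`) lands on cloud nodes, so cloud spend tracks the size of the burst rather than the whole workload.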

What are the advantages?

Lower run-rate for predictable workloads

For I/O-intensive applications, on-premises resources can be more cost-effective. This can also support cloud repatriation efforts, as migrating such applications from public clouds has been shown to reduce infrastructure costs by as much as 50 percent.

Performance and latency control

Hosting data-heavy or latency-sensitive services on dedicated hardware close to users removes lengthy network round-trips that can occur with public cloud deployments.

Easier compliance & data sovereignty

Keeping specific datasets within required jurisdictions satisfies residency regulations that shared cloud infrastructure may struggle to meet.

What are the drawbacks?

Repatriation projects can be costly

Pulling data back is as resource-intensive as the original migration, requiring discovery, dependency mapping, and cut-over testing before a single byte moves.

Operational complexity rises

A hybrid ecosystem means monitoring, patching, and securing two (or more) environments, and requires operational experience in all of them.

Elasticity gaps for bursty workloads

Unless capacity is over-provisioned, on-prem tiers cannot scale on demand like cloud instances, so spiky workloads may still incur cloud costs or face throttling if on-prem sizing is off.

Pulling sensitive workloads back on-prem can deliver sizeable cost savings, tighter performance, and easier compliance, provided you’re ready to fund the facilities and shoulder the extra operational complexity.

What is everyone choosing?

Working with our customers at Spectro Cloud, we have found that it is rare for them to settle on a single home for their Kubernetes clusters. On average, they spread clusters across 5–6 different infrastructure environments, up from an average of 3.6 in 2024, and every new environment correlates with higher cluster and node counts.

When we asked what was driving this expansion, three motives stood out:

- Minimizing cloud risk with a multi-cloud strategy (60%)

- Repatriating cloud workloads to on-prem for control and cost control (56%)

- Finding the right technologies and pricing to support my AI initiatives (56%)

From this, we can gather that the modern Kubernetes strategy is less about picking one infrastructure over another and more about mixing and matching placement to balance risks, costs, and the ever-growing AI demands.

What should you choose vs what can you choose?

In a perfect world, you could choose the architecture that best suits your needs. In reality, critical factors outside your control can prevent this, such as:

- Inherited hardware that you can't afford to scrap or leave idle.

- Technical expertise within your organization.

- Network or regulatory requirements that prevent you from using certain platforms.

- Fragmented operations across diverse geographies or business units, each with their own constraints and preferences.

- Financial and procurement pressures that impact operational expenditure.

Often, the answer to what you can choose comes down to what you can manage. And this is where we can help. One of the things our customers value the most is that Palette can be used as a universal control plane for all their Kubernetes environments. Palette gives them a single pane of glass to deploy, observe, and govern every cluster without having to log into a separate dashboard for each environment. There are also tools to help with consistent configuration, cost management, unified Role-Based Access Control (RBAC), and much more. If you’d like to get a demo, you know where to go.
