Enterprise AI in 2026: Sovereign, agentic, edge and AI factories

2025 was, to put it mildly, a busy year for us and for everyone in the world of enterprise AI. And the pace shows no signs of slowing. If you’re trying to navigate the shifting sands, let us shed some light on the trends that really matter, without a single mention of AGI.

We see four key trends shaping the AI landscape as we look ahead into 2026:

- Sovereign AI investments will accelerate thanks to growing interest from governments, regulated industries and large enterprises keen to retain control over data, models and infrastructure, and to address security, governance and compliance concerns.

- Agentic AI marks a move beyond generative AI chatbots, as enterprises aim to have tasks executed autonomously. Innovations such as MCP servers and the A2A protocol have quickly matured into common foundations that remove friction.

- Edge AI adoption continues as enterprises look to bring intelligence closer to where data is generated. Adopters, and the vendors that serve them, still need to meet low-latency expectations while coping with limited bandwidth and privacy concerns.

- Lastly, AI factories are on the radar, bringing an industrial mindset to developing and running AI at scale, through purpose-built platforms that accelerate all stages of the AI workflow.

Together, these forces signal a broader shift: AI used to be a feature, and now it is a distributed, security-critical system. The implications are profound.

Sovereign AI: why enterprises and governments are reshaping AI control

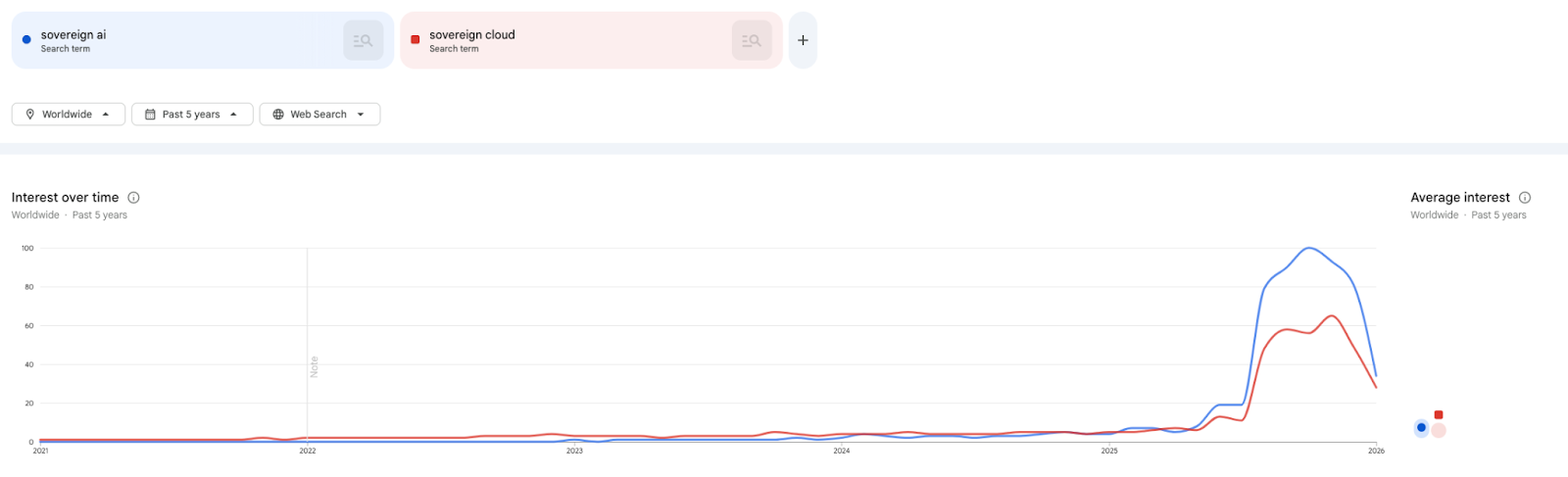

Starting in 2025, large enterprises, regulated industries and governments around the world made a huge push to invest in sovereign clouds and sovereign AI. Gartner has predicted that 65% of governments will introduce technological sovereignty requirements by 2028.

Sovereign clouds are needed not only for data residency. They reduce dependence on foreign technology providers, which is often a matter of national policy as well as sensible risk management. They also enable AI systems to align with local regulations. Organizations can expect to improve resilience by owning more of the AI stack: not just the bare metal, but all the way up to platforms and operations. This kind of architecture also fosters internal innovation and makes knowledge transfer easier.

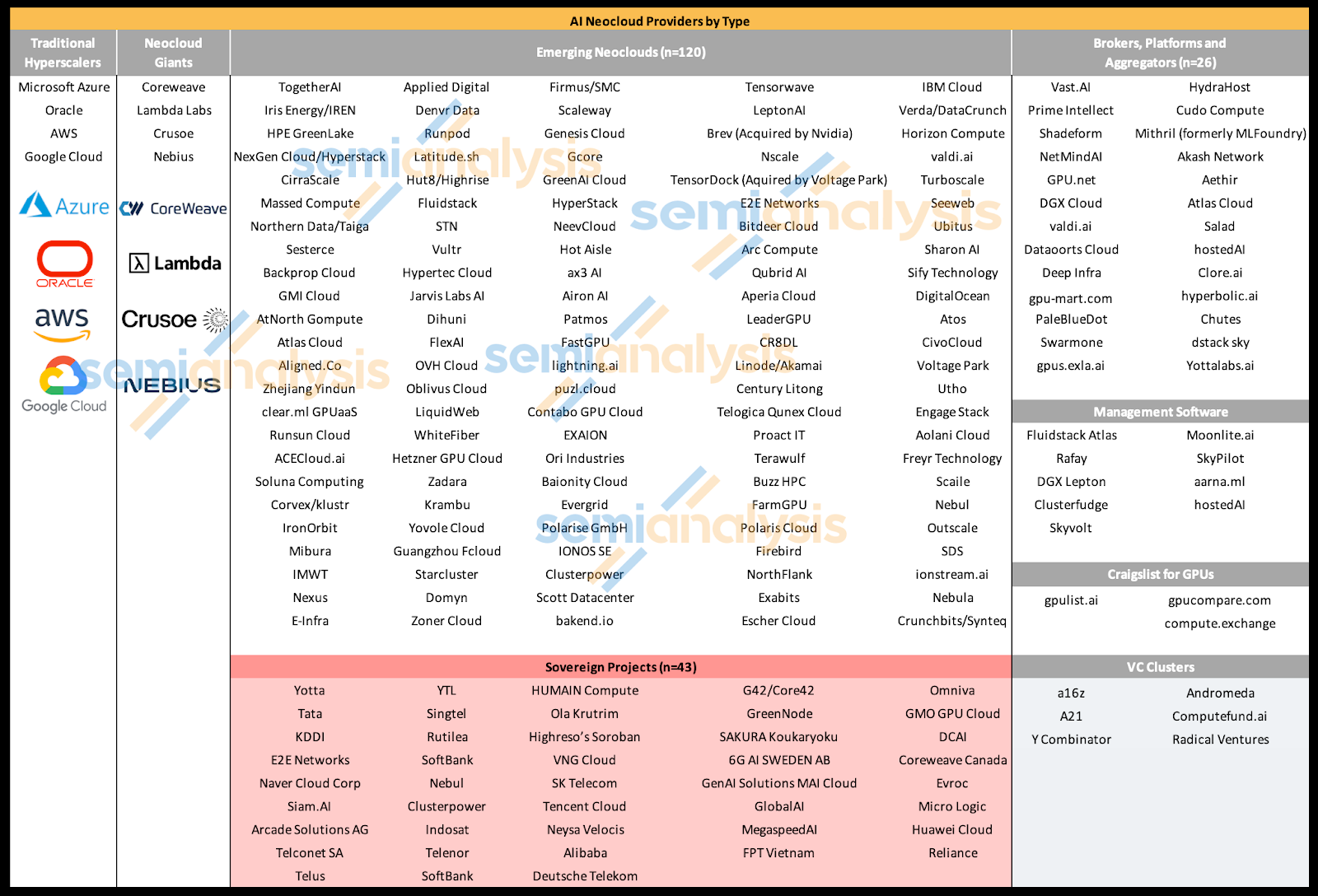

The result is that the cloud landscape is no longer quite so dominated by the three hyperscalers. It has fragmented as neoclouds such as Nscale, Nebius and Lambda expand, and there are many more, as the ClusterMAX listing from SemiAnalysis shows.

Many large enterprises and governments are also breaking ground on their own cloud and AI data center projects, such as SAP’s EU AI Cloud, a vision for European digital sovereignty.

Of course, the hyperscalers are not sitting idle. Just this month AWS announced its European Sovereign Cloud, and no doubt more will follow.

What it takes to make sovereign AI happen

Reading the press releases, the rise of sovereign cloud and AI seems quick and even easy. But in reality sovereign AI requires vast amounts of computing resources. Cloud builders (whether they’re a government, service provider or private enterprise) need to gain access to scarce and expensive hardware, which means raising a huge amount of capital or negotiating complex deals with partners. They need to secure suitable sites, power and skilled people to build and operate modern AI-ready facilities. Only a limited number of organizations will have the resources and ambition to forge ahead on their own.

And the hard work isn’t over once the ribbon is cut. Sovereign environments need to be operationally reliable and meet the high expectations for performance, scalability, governance and security that customers have grown accustomed to from the traditional hyperscalers (which have had 20 years to get it right). This is especially challenging when economies of scale and interoperability are hindered by the need to segregate operations along jurisdictional boundaries.

Despite these headwinds, 2026 is likely to be the year of sovereign AI. We predict that many enterprises will move their AI workloads into sovereign environments, particularly in regulated industries like healthcare, financial services and defense.

And the knock-on effects will be surprisingly wide-ranging. Sovereignty goes well beyond a one-time infrastructure decision: it spans infrastructure, security, governance and lifecycle management, as well as hiring policies, supply chains, service contracts and partnerships.

Agentic AI: from answers to autonomous actions

With agentic AI, the industry is moving beyond the generative chatbot era into systems that plan and execute, often across multiple tools, apps and workflows.

You don’t have to look far for eyebrow-raising statistics about enterprise agentic adoption. By some measures, half of enterprises are already running agents in production or pilots, with more following.

The allure is obvious. AI agents were initially meant to take on repetitive, manual tasks, improving process performance at lower cost in business domains like customer service, supply chain and HR. In IT operations, agents can make configuration changes in response to events such as troubleshooting alerts. According to IDC, over 40% of enterprise applications will benefit from agentic automation.

Whatever the process, agents can automate actions quickly across different systems and operate continuously, without the variability of human workers. With rote tasks delegated to agents, human teams can be more productive and (as the story usually goes) more strategic.

The risks of autonomous decision-making at scale

The challenges are also obvious. Agents that actually make decisions and complete actions — autonomously, at speed and scale — open the business to wide-ranging risks. Imagine the scenarios:

- A supply chain agent cancels hundreds of stock orders incorrectly due to a misconfigured rule

- An HR agent automatically and illegally discriminates against thousands of applicants because of systemic biases in its training data

- A marketing agent spends millions on incorrect advertising due to a change in bidding systems

- A customer support agent approves fraudulent refunds after being gamed by scammers

Without extreme care over workflow design, data sources, error handling and oversight, there’s a lot that can go wrong. There’s also the risk of ‘agent sprawl’, as different teams across the business build or buy agents for specific, siloed processes. As these agents interact, unintended consequences could proliferate unless strong observability and governance are in place.

From a technical perspective, agents have identity, privileges and access to systems and data across the business and out into the extended supply chain, either directly or indirectly through other agents. This makes them a new and largely unexplored security risk: an entirely new, non-deterministic attack surface for hackers to exploit. We will almost certainly see agent-involved security breaches this year.

Luckily, the industry is working rapidly to mature agentic AI, with organizations such as the Agentic AI Foundation leading the way, providing stewardship for emerging standards such as the Model Context Protocol (MCP). MCP enables agents to interact with tools and data sources through standardised, well-defined interfaces — a vital step towards portability, security, and observability.
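To make "standardised, well-defined interfaces" a little more concrete: MCP messages are JSON-RPC 2.0, and an agent asks a server to run a tool with a `tools/call` request that names the tool and passes its arguments. The minimal sketch below only builds such a request as a Python dict; the tool name `check_inventory` and its arguments are hypothetical, and a real client would send the message over one of MCP's transports after the initialization handshake, usually through an official SDK rather than hand-built JSON.

```python
import json
from itertools import count

# Monotonic request IDs: JSON-RPC 2.0 uses "id" to match each response to its request.
_request_ids = count(1)


def make_tool_call(tool_name: str, arguments: dict) -> dict:
    """Build an MCP-style JSON-RPC 2.0 request asking a server to run one tool."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool and arguments, for illustration only.
request = make_tool_call("check_inventory", {"sku": "A-1042", "warehouse": "EU-WEST"})
print(json.dumps(request, indent=2))
```

Because every tool call has the same shape, a platform can log, inspect and apply policy to agent actions in one place, which is where the portability, security and observability benefits come from.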

Our prediction for 2026 is that agentic AI will be widely adopted but selectively trusted. The most successful implementations will emphasize orchestrated agents with clear guardrails, policy enforcement and human-in-the-loop controls. Rather than remaining opaque actors or becoming fully autonomous, agents will more likely become dependable operators within governed platforms.
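As a rough illustration of what "policy enforcement and human-in-the-loop controls" can mean in practice, the sketch below gates every action an agent proposes: actions outside an allowlist are denied, high-value refunds (echoing the fraud scenario above) are routed to a person, and everything else proceeds. The agent IDs, action names, the `ProposedAction` type and the threshold are all hypothetical; a real platform would load policy from a central engine and log each decision.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"            # the agent may act on its own
    NEEDS_APPROVAL = "review"  # route to a human before acting
    DENY = "deny"              # blocked by policy


@dataclass
class ProposedAction:
    agent_id: str
    action: str          # hypothetical action name, e.g. "issue_refund"
    amount: float = 0.0  # monetary value of the action, if any


# Hypothetical policy: which actions each agent may take at all.
ALLOWED_ACTIONS = {"support-agent": {"issue_refund", "send_reply"}}
# Hypothetical threshold above which a refund needs human sign-off.
APPROVAL_THRESHOLD = 500.0


def evaluate(proposal: ProposedAction) -> Decision:
    """Apply the guardrail policy to a single proposed agent action."""
    allowed = ALLOWED_ACTIONS.get(proposal.agent_id, set())
    if proposal.action not in allowed:
        return Decision.DENY  # unknown agents and unlisted actions fail closed
    if proposal.action == "issue_refund" and proposal.amount > APPROVAL_THRESHOLD:
        return Decision.NEEDS_APPROVAL
    return Decision.ALLOW


for p in [
    ProposedAction("support-agent", "issue_refund", 40.0),
    ProposedAction("support-agent", "issue_refund", 2_000.0),
    ProposedAction("support-agent", "cancel_order"),
]:
    print(p.action, p.amount, "->", evaluate(p).value)
```

The design choice worth noting is the default: anything not explicitly allowed is denied, so a new or misconfigured agent fails closed rather than open.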

Edge AI: real-time inference where latency, bandwidth, and privacy matter

We’re fond of saying that edge is a natural home for AI workloads, particularly for inferencing workloads. The edge is where business happens! It’s where your customers are, your products are, your employees are: in factories, restaurants, vehicles, clinics, farms and streets. If you can sense what’s going on in these edge environments, and act on those events… well, the use cases are endless.

But when you’re applying a trained model to act at the edge, whether you’re supporting clinical decisions in healthcare, inspecting parts on a manufacturing line, or monitoring security video feeds in a retail store, you rapidly run into the laws of physics.

Often these workloads need to happen in real time: if you spot a flaw in a manufactured product, you need to stop the line immediately. If your AI is powering a self-driving car or a military drone, you can’t afford 300ms delays.
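A quick back-of-the-envelope calculation shows why a few hundred milliseconds matter: distance travelled is speed multiplied by delay. The helper below is a hypothetical illustration (constant speed, no braking or processing time modelled), applied to a vehicle at 100 km/h and the 300 ms delay mentioned above.

```python
def distance_during_delay(speed_kmh: float, delay_ms: float) -> float:
    """Metres travelled at constant speed while waiting delay_ms for an inference result."""
    speed_m_per_s = speed_kmh * 1000 / 3600  # convert km/h to m/s
    return speed_m_per_s * delay_ms / 1000   # convert ms to s and multiply


# A vehicle at 100 km/h waiting 300 ms for a decision.
print(f"{distance_during_delay(100, 300):.1f} m")
```

That works out to roughly 8 metres covered before the system can react, which is why these decisions need to be made on the device rather than after a round trip to a distant data center.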

Factors such as latency, bandwidth constraints and ingress/egress costs mean it’s often infeasible to send edge inference back to the cloud or data center; instead, the AI workload is best run right there at the edge.

This is especially true if you’re handling sensitive data like medical images or facial recognition footage, which is often subject to compliance and security requirements; it’s safer if it never leaves the device for processing.

Never underestimate the challenges of edge computing

Despite all the arguments for edge as the home of AI, the challenges are significant. Edge environments are inherently distributed and heterogeneous. Lifecycle management, device maintenance, observability and model updates are far more complex than in centralized clouds. Models must be optimized and tuned to meet the power constraints of edge devices.

Our deep research into the topic culminated in the State of Edge AI report, which is well worth a read if this topic is on your radar.

We found that most enterprises had only been working on their edge AI projects for two years or less, and only 11% had reached any kind of full production rollout. Put simply, teams lack the depth of experience today to overcome the many complexities of edge AI. But the growth opportunity is there, and in 2026 we expect a lot of progress.

We believe edge AI will shift towards managed fleets operated from a single platform. Success will be defined by secure operations, policy-driven orchestration and tight integration with central AI platforms. This will transform the edge from an experimental frontier into a dependable layer of the enterprise and sovereign AI stack.

AI factories: industrializing AI at enterprise scale

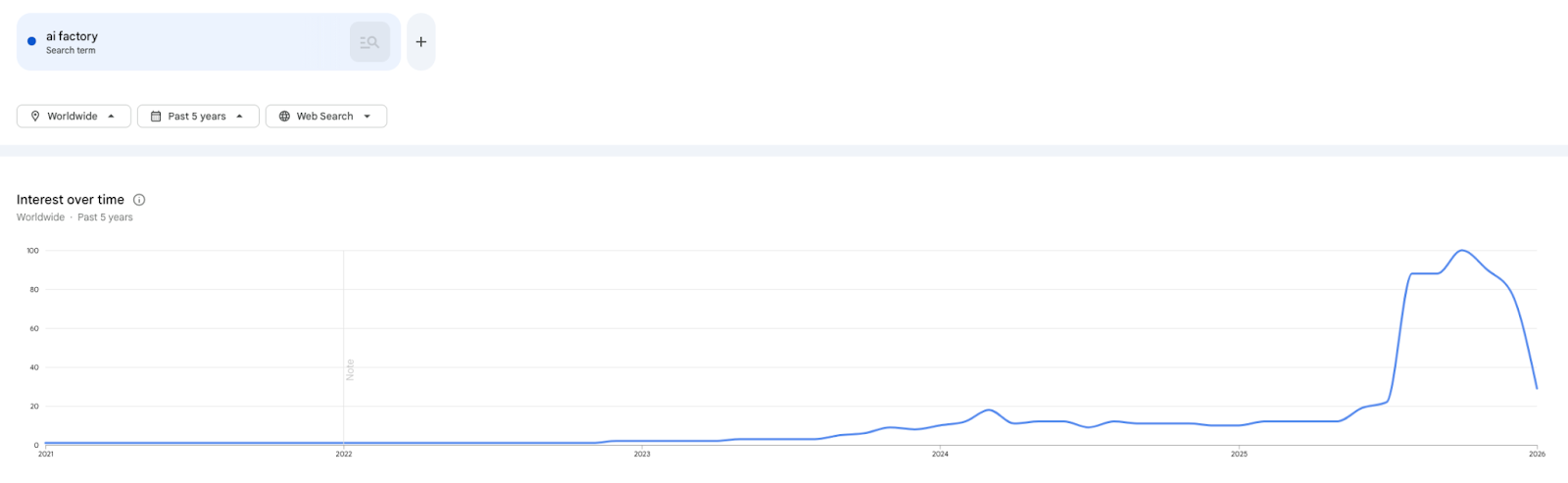

As enterprises mature and scale their AI efforts from experimentation to production, they naturally start to look for ways to industrialize how they operate, to benefit from improved efficiency and output. This is where the concept of AI factories came from. In the words of NVIDIA, an AI factory “manufactures intelligence”.

Leaving the high-falutin’ metaphors behind, the term ‘AI factory’ often refers to a large data center buildout packed with accelerated compute, networking and storage to support AI workflows and lifecycles at scale, from ingesting raw data to training models and serving inference.

In this sense, AI factories are both a technical and an operational concept. They are not just a big bit barn with a load of GPUs. They offer a blueprint that goes beyond the infrastructure to include all the processes and operations around it, and they give practitioners the tooling to run different activities, from pre-processing to model optimization. They may include tooling that improves team collaboration and reduces repetitive work, and they give platform teams standardized governance and easier maintenance. The result is faster time to value and the ability to deliver AI capabilities reliably across teams, regions and use cases.

Architecturally, AI factories aim to address some of the challenges that traditional data center designs have suffered from. For example, they emphasise high-performance storage (a so-called AI Data Platform) and networking to move large volumes of training data around, so that expensive, powerful GPUs aren’t left idle waiting for their next job because of network holdups. GPU underutilization is a huge issue in large AI infrastructures today: according to Fujitsu, 75% of organisations run their GPUs at less than 70% of capacity.

Building the factory of the future

All of this sounds very appealing, and perhaps lends some credence to NVIDIA’s claim that “every enterprise needs one”. But the picture isn’t all rosy. AI factory hardware is specialized and complex: it’s expensive to buy and needs specialist integrators to get it up and running. To fully utilize all that investment at scale, software supply chains and operational practices must be highly optimized; otherwise humans become the bottleneck. With so much data in one place, and many teams collaborating on shared systems, it’s also vitally important to get security, observability and multitenancy right.

Throughout 2026, we’ll see more large enterprises and public sector bodies invest in AI factories to gain a competitive edge, recognizing their role as strategic assets.

Ironically, as hardware shortages continue to bite, the sheer utilization advantage of AI factories makes them even more compelling.

We’ll see organizations get more creative in their sourcing strategies, looking at diverse hardware and even hybrid cloud options for some capacity and some use cases. In that case, the prime consideration becomes choosing an infrastructure-agnostic software platform and a repeatable approach that ensures predictable outcomes.


The year we take charge of our AI future

So, those are the four trends we see in enterprise AI in 2026. Of course, the mainstream headlines around AI center on bigger, societal issues: the environmental impact of giant data centers in our communities, the effect of AI agents on jobs and of chatbots on mental health, the treatment of intellectual property and private data in training and, ultimately, the question of whether we’re in an AI bubble.

In reality, all four of the trends we discussed have a huge impact on how those broader issues will play out. If we as an industry find ways to prove the value of AI innovations, to handle data securely, to use resources efficiently and to protect citizens through sovereignty, we will be on the path to sustainable success. Over the past several years we’ve had our hype and our experimentation. 2026 is the year when we all turn our attention to results.

For our part at Spectro Cloud, we are helping to drive open standards for AI and the underlying cloud-native infrastructure through our work in the CNCF and the new Agentic AI Foundation (AAIF), as well as through collaboration with partners such as NVIDIA.

In 2025 we launched PaletteAI, a powerful platform that enables teams to deploy and manage their entire stack, from metal to model. Our early customers have already shown us that it supports the AI/ML experience and reduces friction between teams. The end result is faster time to production, higher utilization and stronger governance. We can’t wait to show you what we’ve got planned for 2026. Join us at NVIDIA’s GTC in San Jose this March and you might just get a sneak peek.
