AI at the edge isn’t easy — but it’s achievable

At KubeCon + CloudNativeCon Edge Day in Atlanta, Spectro Cloud CTO and co-founder Saad Malik took the stage to share lessons from years of customer experience and research into one of today’s biggest frontiers in Kubernetes — AI at the edge.

“The dream,” Malik began, “is you have a model, you’re able to make it into a container, deploy it to a device.”

He paused before adding with a grin, “It still sounds easy, but as you all know — it’s not.”

From talent shortages and compliance headaches to fast-evolving models and massive operational complexity, Malik’s short keynote walked the audience through what’s really happening as organizations push AI closer to where data is created.

The state of AI at the edge

Malik shared insights from Spectro Cloud’s forthcoming State of Edge AI report, which explores how organizations are adopting and operating AI in edge environments. The report offers a deep dive into edge AI, much as the State of Production Kubernetes research does for the wider K8s landscape.

“Most organizations doing edge AI just started a couple of years ago, and only 11% of them have fully deployed an edge computing platform at scale,” he said. “Many CIOs expected they’d already be seeing ROI by now.”

So what’s holding them back? Malik summed it up in three words: budgets, skills, and inconsistency.

Beyond those, he added, there’s one challenge that’s uniquely pressing at the edge: security — not just in software, but physically.

“AI adds another element,” he explained. “Models themselves are complex and non-deterministic. That means new attack vectors — from prompt injections to manipulated computer vision systems. How do you protect against these things?”

The importance of consistency

Even when teams can secure their infrastructure, they still face operational complexity. Malik pointed to updates as a major source of pain.

“If it’s one environment, it’s easy. But as you go from hundreds to thousands to tens of thousands of locations — how do you provide updates consistently?”

He explained that while most organizations update infrastructure quarterly, models and applications change much faster.

“As soon as a model is deployed, it already starts to see drift,” Malik said. “The data changes, behavior shifts, and performance starts degrading.”

The more advanced organizations he’s seen are updating models weekly. And many of them — about 50% — use Kubernetes as their orchestration layer for these workloads.
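The drift Malik describes can be made concrete with a small check that compares the data a model saw at training time against what it sees in the field. The sketch below uses the population stability index (PSI), a common drift metric. This is an illustrative choice, not something named in the keynote, and the function name, bin count, and sample data are all assumptions.

```python
import math


def population_stability_index(reference, live, bins=10):
    """PSI between two samples of one numeric feature or model score."""
    lo, hi = min(reference), max(reference)
    # Fall back to a width of 1.0 if the reference sample is constant.
    width = (hi - lo) / bins or 1.0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp so live values outside the reference range land in the edge bins.
            idx = int((v - lo) / width)
            counts[max(0, min(bins - 1, idx))] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    ref_p, live_p = proportions(reference), proportions(live)
    return sum((l - r) * math.log(l / r) for r, l in zip(ref_p, live_p))


# Hypothetical data: scores at training time vs. scores seen at an edge site.
reference = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]

psi_same = population_stability_index(reference, reference)
psi_shifted = population_stability_index(reference, shifted)
```

A widely used rule of thumb treats a PSI above roughly 0.25 as a significant shift. The right threshold for triggering a model update depends on the workload and would need tuning per deployment.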

Why Kubernetes — and where Spectro Cloud fits in

“Kubernetes ties in with the IT tools teams already use,” Malik noted. “They understand how it works and they see all the advantages that cloud-native brings.”

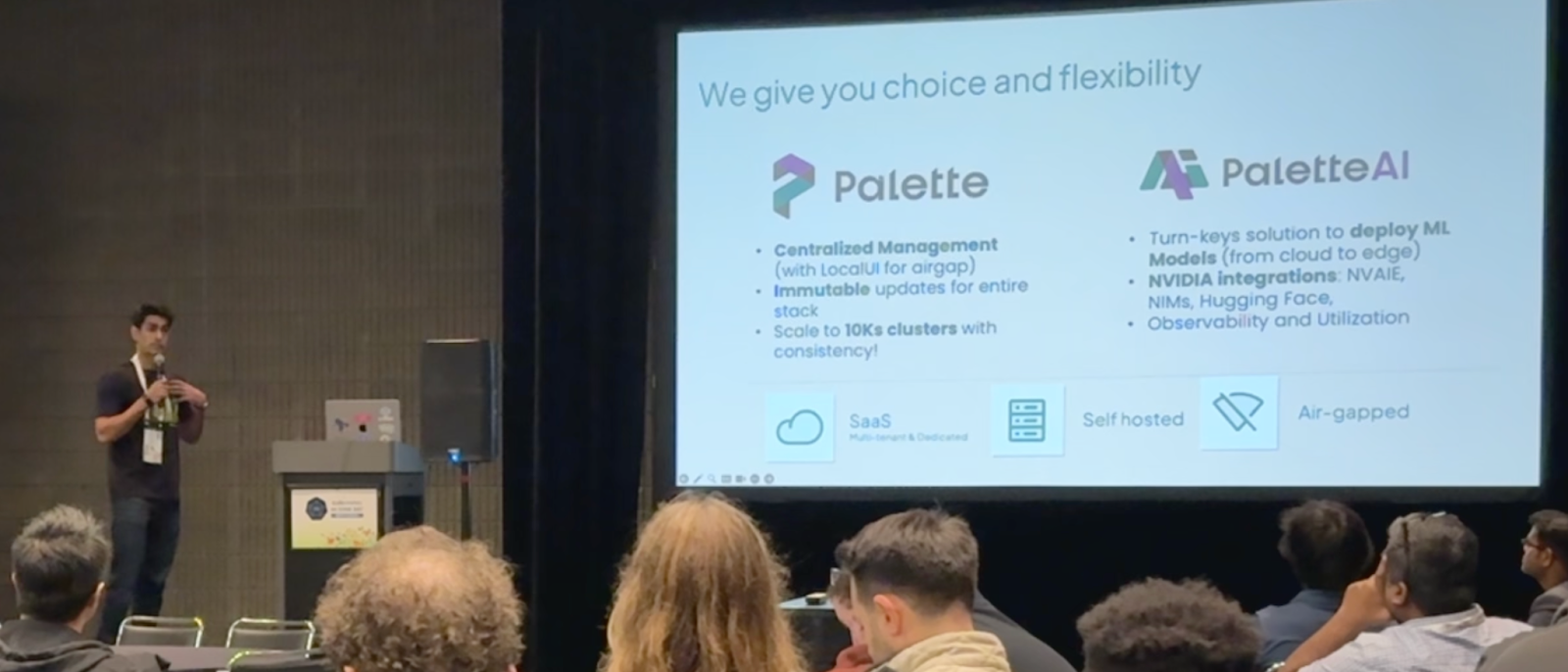

That’s where Spectro Cloud’s Palette platform comes in. Malik kept the product pitch light but made clear that the technology enables organizations to scale and standardize.

“Our solution has been tested up to 10,000-plus clusters from a single management plane,” he said. “And we’re one of the primary sponsors of Kairos, providing immutable updates from the OS all the way to the top of the stack.”

He also introduced PaletteAI, a new offering aimed at helping data scientists and platform engineers deploy models, inference engines, and LLMs across environments — from cloud to data center to edge.

Spectro Cloud, he added, works closely with NVIDIA on technologies like NVIDIA AI Enterprise (NVAIE), the GPU Operator, and native model integrations.

“With very expensive hardware, especially GPUs, we make it easy to fully utilize all the resources you have — from a single control plane.”

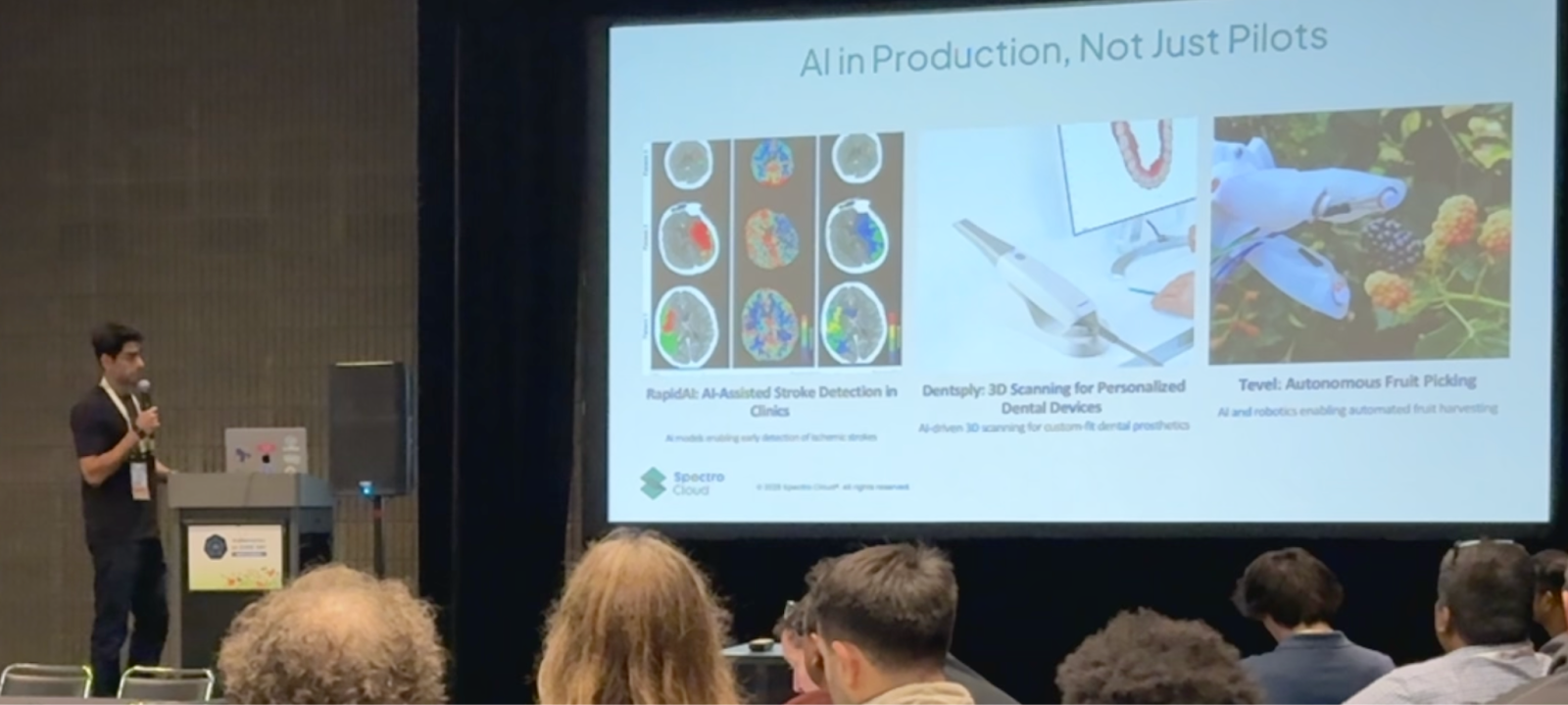

Real-world examples: AI that saves lives and grows fruit

Malik highlighted several Spectro Cloud customers that illustrate just how diverse and powerful edge AI can be.

- RapidAI, he said, is reducing the time to detect ischemic strokes from 18 hours to just 20 minutes, “really saving lives very quickly.”

- Dentsply Sirona uses local image classification to power intraoral scanners that create precise 3D dental models.

- And Tevel, an agricultural robotics company, is using edge AI to detect, classify, and pick fruit autonomously, optimizing harvests in real time.

“These are three different industries,” Malik said, “yet every one of them is where we’re seeing AI at the edge becoming more and more real.”

Making edge AI reliable and scalable

Malik closed his keynote on a pragmatic but hopeful note.

“Edge AI isn’t easy,” he said, “but it is achievable. If you do it with security, if you do it with consistency, and you use cloud-native infrastructure, then you can make it reliable — and you can make it scale.”

If you’re working on your own edge projects, particularly with AI, visit us at booth 621 at KubeCon all this week, or set up a one-to-one meeting to talk through your project.

To be the first to receive our State of Edge AI report, make sure you’re subscribed to our newsletter.
