Finally! Simple, declarative orchestration for OCM multi-clusters

Building on Open Cluster Management

We are excited to introduce the fleetconfig-controller, a new addition to the Open Cluster Management (OCM) ecosystem.

The fleetconfig-controller is a Kubernetes operator that acts as a lightweight wrapper around clusteradm. Anything you can accomplish imperatively via a series of clusteradm commands can now be accomplished declaratively using the fleetconfig-controller.

It simplifies the management of multi-cluster environments by introducing the FleetConfig custom resource.

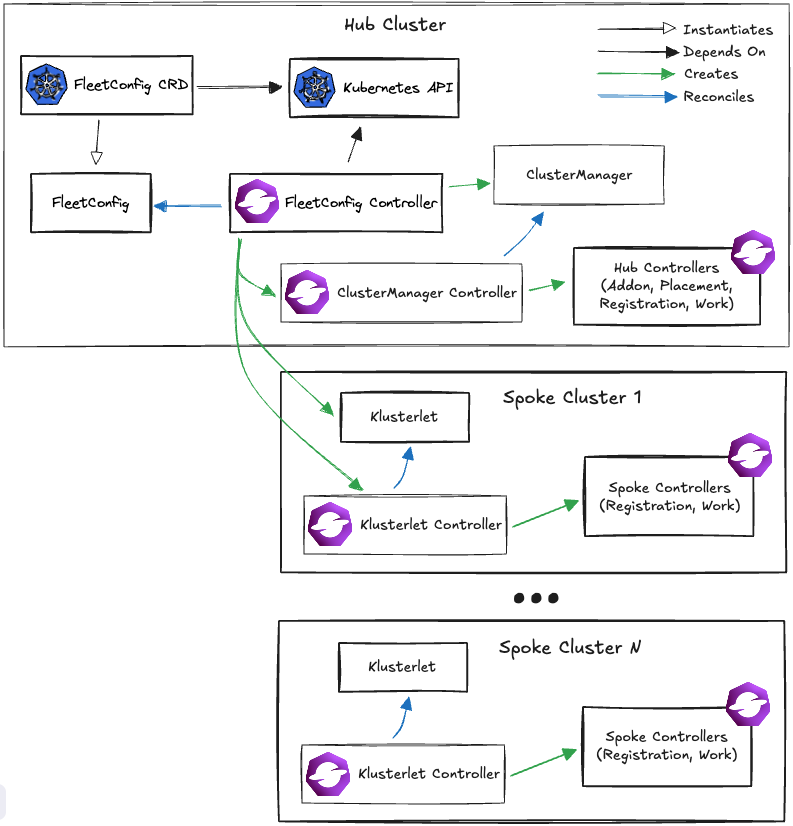

Open Cluster Management (OCM) uses a "hub-spoke" architecture, in which a hub cluster manages multiple spoke clusters, also known as managed clusters. Each spoke runs an agent called the klusterlet.

This architecture decouples computation and decision-making from execution, allowing the hub to declaratively manage the lifecycle of multi-cluster environments without directly interacting with the managed clusters. Instead, managed clusters pull configuration from the hub and perform local reconciliation, ensuring scalability and reducing the hub's workload.

This model allows for efficient management of thousands of clusters, with each klusterlet operating independently and always reconciling against the last-known source of truth, even during network disruptions.

With the fleetconfig-controller, you can declaratively manage the lifecycle of OCM multi-clusters, including initializing the hub cluster and one or more managed clusters. Lifecycle management for OCM add-ons is also supported. Any add-on from OCM's addon-contrib GitHub repository can be installed and enabled on the managed clusters of your choice.

These actions are typically performed imperatively via clusteradm commands or by installing multiple Helm charts: one on the hub and another on each spoke cluster. Without a declarative interface for managing the lifecycle of an OCM multi-cluster, this approach has several limitations:

- Complexity around bootstrapping and maintenance

- Multi-cluster topology / configuration cannot be managed via GitOps

- Not aligned with Kubernetes best practices
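For comparison, the imperative flow looks roughly like the sketch below. The function names and the context arguments are illustrative and do not come from this post. The hub token and API server URL are values that clusteradm prints after initializing the hub, so they are passed in as arguments here.

```shell
# Sketch of the imperative clusteradm flow that a FleetConfig replaces.
# Function names and arguments are hypothetical, chosen for illustration.

# init_hub HUB_CONTEXT
# Installs the OCM hub components on the hub cluster.
init_hub() {
  clusteradm init --wait --context "$1"
}

# join_spoke HUB_CONTEXT SPOKE_CONTEXT CLUSTER_NAME HUB_TOKEN HUB_APISERVER
join_spoke() {
  # Install the klusterlet on the spoke and request to join the hub.
  clusteradm join \
    --hub-token "$4" \
    --hub-apiserver "$5" \
    --cluster-name "$3" \
    --wait \
    --context "$2"

  # Approve the join request from the hub side.
  clusteradm accept --clusters "$3" --context "$1"
}
```

Every additional spoke repeats the join and accept steps by hand, which is the bookkeeping the controller takes over.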

Now, with the fleetconfig-controller, the orchestration of all OCM components is fully automated and GitOps compatible. Check out the KEP for a detailed overview of the motivation and design details. If you want to dive into the code, check out the GitHub repository.

Let’s try out fleetconfig-controller

To get hands-on experience with the fleetconfig-controller, you can try out two different scenarios that demonstrate its capabilities in managing multi-cluster environments. These scenarios will help you understand how the controller orchestrates OCM hubs and managed clusters.

Prerequisites

Before you begin, ensure you have the tools used by the commands below installed: kind, kubectl, and Helm.

Before beginning either scenario, export your target directory. You will store kubeconfigs there in subsequent steps.

export TARGET_DIR="" # edit this to a directory of your choosing!

mkdir -p $TARGET_DIR
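A quick way to confirm the tooling is in place is a small helper like the one below. The function name is hypothetical, and the tool list in the usage note is inferred from the commands used later in this post.

```shell
# Print the name of every CLI passed as an argument that is not on PATH.
# Prints nothing when all tools are available.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}
```

Running `missing_tools kind kubectl helm` should print nothing if you are ready to go.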

Next, add the Open Cluster Management Helm repository.

helm repo add ocm https://open-cluster-management.io/helm-charts

helm repo update ocm

By default, the Helm chart produces a FleetConfig to reconcile. You can disable this behavior if needed. Refer to the chart README for full documentation. Both scenarios below leverage the default FleetConfig to bootstrap one or more kind cluster(s) into an OCM multi-cluster.
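If you would like to see the default FleetConfig before installing anything, one option is to render the chart locally and keep only that document. This is a sketch that assumes the chart renders the FleetConfig as an ordinary template. The function name is hypothetical, and extra arguments such as `-f values.yaml` are passed straight through to Helm.

```shell
# Render the chart without installing it and print only FleetConfig documents.
render_fleetconfig() {
  helm template fleetconfig-controller ocm/fleetconfig-controller \
    -n fleetconfig-system "$@" |
    awk '
      # Buffer each YAML document; print it at the separator if it is a FleetConfig.
      /^---/ { if (keep) printf "%s", doc; doc = ""; keep = 0; next }
      { doc = doc $0 "\n" }
      /^kind: FleetConfig$/ { keep = 1 }
      END { if (keep) printf "%s", doc }
    '
}
```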

Single kind cluster (hub-as-spoke)

In this scenario, a single kind cluster acts as both the hub and the spoke. This setup is ideal for testing and development purposes, as it simplifies the architecture by consolidating roles into one cluster. It allows you to experience the basic functionalities of the fleetconfig-controller without the complexity of actually managing multiple clusters.

- Create a kind cluster.

kind create cluster --name ocm-hub-as-spoke \
  --kubeconfig $TARGET_DIR/ocm-hub-as-spoke.kubeconfig

export KUBECONFIG=$TARGET_DIR/ocm-hub-as-spoke.kubeconfig

- Install the fleetconfig-controller Helm chart.

helm install fleetconfig-controller ocm/fleetconfig-controller \
  -n fleetconfig-system --create-namespace

- Verify the FleetConfig reconciliation.

kubectl wait --for=jsonpath='{.status.phase}'=Running fleetconfig/fleetconfig \
  -n fleetconfig-system \
  --timeout=10m
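If you would rather watch the phase change than block silently, a polling loop along these lines does the same job with progress output. The function names are hypothetical. The resource name, namespace, and `.status.phase` field are the ones used in the wait command above.

```shell
# Print the current phase of the default FleetConfig (empty if not yet set).
fleetconfig_phase() {
  kubectl get fleetconfig fleetconfig -n fleetconfig-system \
    -o jsonpath='{.status.phase}'
}

# Poll until the phase is Running or the attempts run out.
# $1 = number of attempts, $2 = seconds to sleep between attempts.
wait_for_running() {
  attempts="$1"
  delay="$2"
  while [ "$attempts" -gt 0 ]; do
    phase="$(fleetconfig_phase)"
    echo "FleetConfig phase: ${phase:-<none>}"
    [ "$phase" = "Running" ] && return 0
    attempts=$((attempts - 1))
    sleep "$delay"
  done
  return 1
}
```

For example, `wait_for_running 60 10` checks every ten seconds for up to ten minutes.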

Two kind clusters (hub and spoke)

This scenario involves two separate kind clusters, one serving as the hub and the other as the spoke. This architecture more closely resembles a production environment where the hub manages multiple spoke clusters. It demonstrates the fleetconfig-controller handling more complex multi-cluster setups, including the management of connections and configurations between the hub and spoke.

- Create two kind clusters.

kind create cluster --name ocm-hub \
  --kubeconfig $TARGET_DIR/ocm-hub.kubeconfig

kind create cluster --name ocm-spoke \
  --kubeconfig $TARGET_DIR/ocm-spoke.kubeconfig

export KUBECONFIG=$TARGET_DIR/ocm-hub.kubeconfig

- Generate and upload a spoke kubeconfig.

You must prepare an internal kubeconfig for the spoke cluster, which is used as configuration in the hub cluster for it to connect to the spoke cluster. Note that the spoke kubeconfig is only necessary during the bootstrapping process. It can safely be deleted once the spoke cluster has joined the hub. Spoke clusters maintain their connection to the hub cluster automatically after the bootstrapping process is complete.

Issue the following commands to prepare the kubeconfig secret.

kind get kubeconfig --name ocm-spoke \
  --internal > $TARGET_DIR/ocm-spoke-internal.kubeconfig

kubectl create secret generic fleetconfig-spoke-kubeconfig \
  --from-file=kubeconfig=$TARGET_DIR/ocm-spoke-internal.kubeconfig

- Create a minimal values.yaml file for linking the two kind clusters together.

cat <<EOF > values.yaml
fleetConfig:
  spokes:
    - name: hub-as-spoke
      createNamespace: true
      kubeconfig:
        inCluster: true
      klusterlet:
        mode: "Default"
        purgeOperator: true
        forceInternalEndpointLookup: true
    - name: spoke
      createNamespace: true
      kubeconfig:
        # spoke kubeconfig secret provisioned during the previous step
        secretReference:
          name: fleetconfig-spoke-kubeconfig
          namespace: default
      klusterlet:
        mode: "Default"
        purgeOperator: true
        forceInternalEndpointLookup: true
EOF

- Install the fleetconfig-controller Helm chart on the hub.

helm install fleetconfig-controller ocm/fleetconfig-controller \
  -n fleetconfig-system -f values.yaml --create-namespace

- Verify the FleetConfig reconciliation.

kubectl wait --for=jsonpath='{.status.phase}'=Running fleetconfig/fleetconfig \
  -n fleetconfig-system \
  --timeout=10m

Poking around

Regardless of which scenario you chose (we hope you worked through at least one of them!), there are a handful of notable custom resources that you’ll want to inspect to flesh out your understanding.

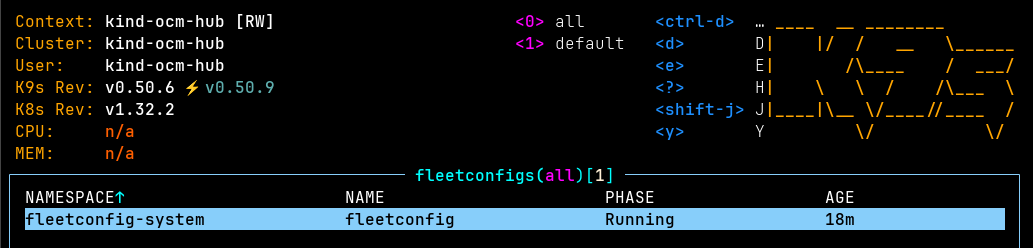

On the hub cluster, you should see a FleetConfig in the Running phase.

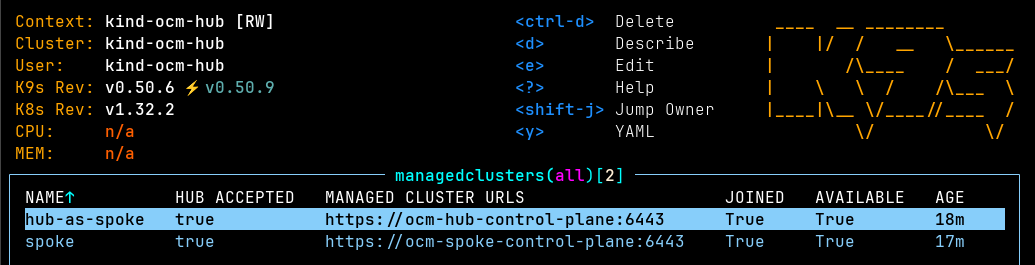

You should also see one or two ManagedClusters on the hub cluster, depending on your chosen scenario.
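In the two-cluster scenario, this is also a good moment to confirm that the spoke has joined and then remove the bootstrap kubeconfig secret, which is no longer needed after that point. The sketch below assumes the ManagedCluster is named after the spoke entry in values.yaml (for example, `spoke`). The function names are hypothetical. The secret name and namespace are the ones created earlier.

```shell
# Print "True" once the named ManagedCluster reports that it has joined the hub.
managed_cluster_joined() {
  kubectl get managedcluster "$1" \
    -o jsonpath='{.status.conditions[?(@.type=="ManagedClusterJoined")].status}'
}

# Delete the bootstrap kubeconfig secret, but only after the spoke has joined.
cleanup_bootstrap_secret() {
  if [ "$(managed_cluster_joined "$1")" = "True" ]; then
    kubectl delete secret fleetconfig-spoke-kubeconfig -n default
  else
    echo "ManagedCluster $1 has not joined yet; keeping the secret" >&2
    return 1
  fi
}
```

Run `kubectl get managedclusters` for the overview, then `cleanup_bootstrap_secret spoke` against the hub.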

In both scenarios, the hub cluster will include both a ClusterManager and a Klusterlet. These custom resources each come with their own operators, which in turn install the OCM add-on manager (hub only), placement (hub only), registration, and work controllers.
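To see these pieces for yourself, something like the following lists both custom resources and the pods behind them. The function name is hypothetical, and the two namespace names are the usual OCM defaults rather than values stated in this post, so adjust them if your installation differs.

```shell
# List the operator-managed custom resources, then the pods they produce.
# Namespace names are assumed OCM defaults.
inspect_ocm_components() {
  kubectl get clustermanager,klusterlet
  for ns in open-cluster-management-hub open-cluster-management-agent; do
    kubectl get pods -n "$ns"
  done
}
```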

Finally, now that you have a functional multi-cluster, I’d strongly recommend checking out the user scenarios outlined in the OCM documentation. They’re a great way to play around with OCM concepts, such as how to distribute resources from the hub to one or more spoke clusters and other, more advanced OCM topics.
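As a small taste of resource distribution, the sketch below prints a ManifestWork that asks a managed cluster to create a ConfigMap. The function name and the resource names `hello-work` and `hello-from-hub` are made up for this example. The ManifestWork lives in the hub namespace named after the managed cluster, which is assumed here to match the spoke name from values.yaml.

```shell
# Print a ManifestWork that asks the named managed cluster to create a ConfigMap.
# $1 = managed cluster name (also its namespace on the hub).
render_manifestwork() {
  cat <<EOF
apiVersion: work.open-cluster-management.io/v1
kind: ManifestWork
metadata:
  name: hello-work
  namespace: $1
spec:
  workload:
    manifests:
      - apiVersion: v1
        kind: ConfigMap
        metadata:
          name: hello-from-hub
          namespace: default
        data:
          greeting: "hello from the hub"
EOF
}
```

With KUBECONFIG pointing at the hub, `render_manifestwork spoke | kubectl apply -f -` submits it, and the ConfigMap should then appear in the spoke's default namespace.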

Conclusion

The fleetconfig-controller is a powerful tool for managing OCM multi-cluster environments. Donated to the OCM community by Spectro Cloud, this contribution underscores our commitment to advancing open-source solutions and fostering collaboration with the Kubernetes and CNCF communities.

We at Spectro Cloud are excited to see how you use the fleetconfig-controller to streamline your multi-cluster management tasks and look forward to contributing further to the OCM community.
