Effective secrets management in Kubernetes: a hands-on guide

What are secrets, and why do they matter?

A "secret" is an object used to store and manage sensitive information.

There are many types of secrets. They may include passwords, API keys, and other confidential data that applications and services within a cluster may need. Secrets allow you to separate sensitive information from the application's code and configuration files.

In a Kubernetes environment, secrets can be scattered across your cluster, from your applications to your configuration files. They require careful management to prevent breaches and data leaks. The secure handling of secrets is the backbone of application security.

This guide starts with the basics and gets increasingly complex. We'll explore:

- Why secrets are important in Kubernetes

- How to create and use them

- How to secure them in Kubernetes and in external Key Management Services (KMS).

By the time you've reached the end of this guide, you'll be well-equipped to keep your secrets safe.

Prerequisites

- Access to a Kubernetes cluster: You should have access to a Kubernetes cluster, whether it's a local cluster (e.g., Minikube), a cloud-based Kubernetes service (e.g., GKE, EKS, AKS), or an on-premises cluster.

- kubectl command-line tool: Make sure you have the kubectl command-line tool installed and configured to connect to your Kubernetes cluster. You can configure kubectl with the necessary credentials to access your cluster using kubectl config.

- Cluster administrator or appropriate RBAC roles: You need the necessary permissions to create secrets. In most cases, you need to be a cluster administrator or have appropriate role-based access control (RBAC) roles to create, list, or update secrets.

Why are secrets important in Kubernetes?

Secrets are important in Kubernetes for many reasons:

- Security: Secrets protect sensitive information from being exposed in plain text in configuration files, environment variables, or other places where attackers could access them.

- Isolation: Secrets can help you separate sensitive data from your application code, making it easier to manage them and ensuring that only authorized entities can access the secrets.

- Portability: Secrets can be used by multiple pods, making it easier to manage configurations and credentials consistently across your applications.

How to create secrets in Kubernetes

There are several methods for creating secrets in Kubernetes. Kubernetes resources can also consume these secrets in different ways, depending on your specific use case and preferences.

1. Create a secret from literal values

You can create a secret directly from literal key-value pairs using the kubectl create secret command. This method is useful for creating simple secrets containing a small number of key-value pairs.

```shell
kubectl create secret generic my-secret --from-literal=username=myuser --from-literal=password=mypassword
```

In this example, we create a secret named my-secret with two key-value pairs: username and password.

2. Create a secret from a file

You can create a secret by specifying the path to the file. This is especially useful for TLS certificates and private keys.

```shell
kubectl create secret generic my-second-secret --from-file=cert.crt --from-file=file.json
```

In this example, we create a secret named my-second-secret from the files cert.crt and file.json.

3. Create a secret imperatively

You can also create an empty secret using kubectl create secret generic without specifying any data, and then add its data using kubectl edit secret.

```shell
kubectl create secret generic my-secret
kubectl edit secret my-secret
```

This opens the secret in your default text editor, allowing you to add or modify the key-value pairs. Values you add under data must be base64-encoded.

4. Create a secret declaratively

You can create a secret declaratively by defining it in a YAML file and applying it to your cluster. This method is useful for managing secrets as code and automating their creation. Here's an example of a YAML file for creating a secret:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: my-secret
type: Opaque
data:
  username: dXNlcm5hbWU=
  password: cGFzc3dvcmQ=
```

Apply this YAML file using kubectl apply -f secret.yaml, and it will create a secret named my-secret with the specified data.
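The data values in this manifest are simply the base64 encodings of the strings "username" and "password". A quick way to produce such values from a shell is sketched below. It uses printf '%s' rather than echo, because echo appends a newline that would silently become part of the secret. (If you would rather not encode values by hand, a Secret's stringData field accepts plain values and Kubernetes encodes them for you.)

```shell
# Encode values for the "data" field of a Secret manifest.
printf '%s' 'username' | base64   # dXNlcm5hbWU=
printf '%s' 'password' | base64   # cGFzc3dvcmQ=

# Common pitfall: echo adds a trailing newline, so this encodes "password\n".
echo 'password' | base64          # cGFzc3dvcmQK
```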

The secrets created above can be consumed by pods, deployments, and other Kubernetes resources as mounted volumes, as environment variables, or by your own tooling that reads them from the Kubernetes API. See the official Kubernetes documentation on Secrets to read more about using them in your resources.
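As a minimal sketch of the two most common consumption styles, the snippet below writes a Pod manifest that reads the username key of my-secret into an environment variable and mounts the whole secret as files. The pod name secret-consumer, the busybox image, the variable name APP_USERNAME, and the mount path /etc/my-secret are illustrative choices, not requirements.

```shell
# Write a Pod manifest that consumes my-secret in two ways (names are illustrative).
cat <<'EOF' > secret-consumer-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: secret-consumer
spec:
  containers:
    - name: app
      image: busybox:1.36
      command: ["sleep", "3600"]
      env:
        # Environment variable populated from a single key of the Secret
        - name: APP_USERNAME
          valueFrom:
            secretKeyRef:
              name: my-secret
              key: username
      volumeMounts:
        # Every key of the Secret appears as a file under this directory,
        # e.g. /etc/my-secret/username and /etc/my-secret/password
        - name: my-secret-volume
          mountPath: /etc/my-secret
          readOnly: true
  volumes:
    - name: my-secret-volume
      secret:
        secretName: my-secret
EOF

# Then, against a cluster: kubectl apply -f secret-consumer-pod.yaml
```

One practical difference between the two: files from a secret volume are refreshed when the Secret changes (unless mounted with subPath), while environment variables keep the value the container started with.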

The challenge: Kubernetes secrets are not inherently secure

Secrets created in Kubernetes using the methods above are not inherently secure.

When you create a secret, the Kubernetes API returns its data base64-encoded, and by default Kubernetes stores that data in etcd without encryption. Base64 is an encoding, not encryption: anyone who can read the secret, through the API or directly from etcd, can decode it.

Say, for instance, you create a JSON file named docker-creds.json with your Docker credentials as shown below:

```json
{
  "auths": {
    "https://hub.docker.com/": {
      "username": "test_username",
      "password": "test_password"
    }
  }
}
```

You then go ahead and create a secret from this JSON file:

```shell
kubectl create secret generic mysecret --from-file=docker-creds.json
```

The command creates a Kubernetes secret named mysecret from the docker-creds.json file.

To decode this secret, one just needs to run the following command to get access to your username and password:

```shell
kubectl get secret mysecret -o jsonpath='{.data.docker-creds\.json}' | base64 --decode
```

This command gets the encoded data of the docker-creds.json file from the mysecret secret using jsonpath, then uses base64 to decode the data and print it to the terminal in plain text.

For this reason, we need to manage secrets effectively and efficiently to avoid leaking sensitive information to unauthorized users.

Why secrets management is important

Several critical factors drive the urgency of effective secret management in Kubernetes:

- Security: Secrets are the keys to the kingdom. Any compromise can lead to catastrophic data breaches. By default, Kubernetes stores secrets unencrypted in etcd, so you need to be proactive in securing them.

- Compliance: In many industries, compliance with data protection regulations is not an option; it's a legal requirement. Insufficient secrets management can lead to costly fines and legal repercussions.

- Operational efficiency: Proper secrets management simplifies your operations. It reduces the risk of unexpected outages due to mismanaged secrets and ensures that your applications are consistently available and secure.

The solution: dual envelope encryption

The solution to the challenge we discussed above is Dual Envelope Encryption, a security practice that uses two layers of encryption to protect sensitive data.

In the context of Kubernetes, it means that secrets are encrypted twice: once by Kubernetes itself before they are written to etcd (encryption at rest), and once by an external mechanism before they ever reach the cluster.

Kubernetes provides encryption at rest for secrets through an EncryptionConfiguration file read by the API server. To enable it, you configure an encryption provider and point the kube-apiserver at that configuration, so that secrets are encrypted before they are stored in etcd. We will take a deeper look at this in the next section.

The second layer of Dual Envelope Encryption comes into play when you encrypt secrets before they are stored in Kubernetes at all, for example before they are committed to Git.

This can be achieved using client-side encryption tools like SOPS or an external Key Management Service (KMS), both of which we discuss later in this article.
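The "envelope" in envelope encryption refers to wrapping keys in keys: data is encrypted with a data-encryption key (DEK), and the DEK is in turn encrypted with a key-encryption key (KEK) held by a KMS, so only the small DEK ever needs to be sent to the KMS. The Kubernetes KMS encryption provider follows this pattern. The sketch below imitates it locally with the openssl CLI (OpenSSL 1.1.1 or later, for -pbkdf2). Here the KEK is just a local file standing in for a KMS key, and openssl derives each AES key from the hex key material with PBKDF2, so treat it as an illustration of the idea rather than a production scheme.

```shell
#!/usr/bin/env bash
# Local illustration of envelope encryption (needs OpenSSL 1.1.1+ for -pbkdf2).
# kek.hex stands in for a key-encryption key that, in a real setup, never leaves the KMS.
set -euo pipefail
cd "$(mktemp -d)"

printf '%s' 'mypassword' > secret.txt

openssl rand -hex 32 > kek.hex   # key-encryption key (KEK), normally held by the KMS
openssl rand -hex 32 > dek.hex   # data-encryption key (DEK), fresh for this secret

# Layer 1: encrypt the data with the DEK.
openssl enc -aes-256-cbc -pbkdf2 -salt -in secret.txt -out secret.enc -pass file:dek.hex
# Layer 2: encrypt ("wrap") the DEK with the KEK, then discard the plaintext DEK and data.
openssl enc -aes-256-cbc -pbkdf2 -salt -in dek.hex -out dek.enc -pass file:kek.hex
rm dek.hex secret.txt
# What gets stored: secret.enc + dek.enc. Without the KEK, neither can be read.

# Decryption runs in reverse: unwrap the DEK with the KEK, then decrypt the data.
openssl enc -d -aes-256-cbc -pbkdf2 -in dek.enc -out dek.hex -pass file:kek.hex
openssl enc -d -aes-256-cbc -pbkdf2 -in secret.enc -out recovered.txt -pass file:dek.hex
cat recovered.txt   # mypassword
```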

Encrypting secrets at rest (Etcd)

Etcd is a distributed key-value store that serves as the primary data store for Kubernetes. It acts as a central registry, storing configuration data, key-value pairs, and, importantly, secrets for your Kubernetes cluster.

Etcd ensures that essential information about the cluster's state is distributed and consistent, making it a fundamental component in the Kubernetes ecosystem.

Encrypting secrets in etcd is an important security measure to protect sensitive data.

Managed Kubernetes services from the major cloud providers typically encrypt etcd storage at rest by default, and most also offer an additional layer of secret encryption using a key from their KMS. If you're setting up your own Kubernetes cluster, you’ll need to enable encryption of secret data yourself. Here’s how to do it:

1. Back up your etcd data (optional but highly recommended)

Before making any changes, it's crucial to create a backup of your existing etcd data. This backup ensures that you can recover your data if something goes wrong during the encryption process.

2. Enable EncryptionConfig in Kubernetes

Here’s how to enable EncryptionConfig in your Kubernetes configuration:

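```yaml
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
apiServer:
  extraArgs:
    encryption-provider-config: /etc/kubernetes/encryption-provider-config.yaml
  extraVolumes:  # the file must also be mounted into the kube-apiserver static pod
    - name: encryption-config
      hostPath: /etc/kubernetes/encryption-provider-config.yaml
      mountPath: /etc/kubernetes/encryption-provider-config.yaml
      readOnly: true
```

In the above example, we pass the path to an encryption provider configuration file to the kube-apiserver (encryption at rest is configured on the API server, not on the controller manager) and mount that file into the API server's static pod.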

3. Create an encryption provider configuration file

We will then create an encryption provider configuration file (e.g., encryption-provider-config.yaml) that specifies the encryption configuration. This file will define the encryption provider and the settings for encryption at rest. The exact format and settings may vary depending on the encryption provider you choose.

Here's an example of an encryption-provider-config.yaml file for using the "aescbc" encryption provider:

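```yaml
apiVersion: apiserver.config.k8s.io/v1
kind: EncryptionConfiguration
resources:
  - resources:
      - secrets
    providers:
      - aescbc:
          keys:
            - name: key1
              secret: YOUR_BASE64_ENCODED_KEY
      - identity: {}  # fallback so secrets stored before encryption was enabled stay readable
```

In the example above, resources lists the resource types to encrypt (in this case, only secrets), while providers lists the encryption providers in order: the first provider encrypts new writes, and the providers are tried in order when reading.

The example uses "aescbc" (AES in Cipher Block Chaining mode). keys configures one or more encryption keys. Replace YOUR_BASE64_ENCODED_KEY with the actual base64-encoded encryption key. Note that the Kubernetes documentation no longer recommends aescbc, because CBC mode is vulnerable to padding-oracle attacks; where your version supports them, prefer a KMS v2 provider or secretbox.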
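The aescbc provider can use a 32-byte (AES-256) key, and the Kubernetes documentation generates one with head -c 32 /dev/urandom | base64. The sketch below does that and writes the configuration file from step 3 with the key filled in. It writes to the current directory; you would then copy the file to the path the API server is configured with (here /etc/kubernetes/encryption-provider-config.yaml) on each control-plane node.

```shell
#!/usr/bin/env bash
set -euo pipefail

# 32 random bytes, base64-encoded: an AES-256 key for the aescbc provider.
key=$(head -c 32 /dev/urandom | base64)

# Write the configuration from step 3 with the generated key filled in.
cat <<EOF > encryption-provider-config.yaml
apiVersion: apiserver.config.k8s.io/v1
kind: EncryptionConfiguration
resources:
  - resources:
      - secrets
    providers:
      - aescbc:
          keys:
            - name: key1
              secret: ${key}
      - identity: {}
EOF

# The file holds the key in plaintext, so restrict who can read it.
chmod 600 encryption-provider-config.yaml
```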

4. Apply and verify the configuration and encryption

Update the API server so that it reads the encryption configuration file. On a kubeadm cluster, you can regenerate the kube-apiserver static pod manifest from the ClusterConfiguration shown in step 2 (saved here as kubeadm-config.yaml); alternatively, add the --encryption-provider-config flag and the matching volume mount to /etc/kubernetes/manifests/kube-apiserver.yaml by hand. From then on, the API server encrypts secrets before storing them in etcd.

```shell
kubeadm init phase control-plane apiserver --config kubeadm-config.yaml
```

After configuring encryption at rest, you can verify that secrets are encrypted before being stored in etcd. Create or update secrets and ensure that they are stored in their encrypted form.

You can also inspect the contents of etcd directly to confirm that secrets are encrypted when stored. However, be cautious when interacting with etcd directly, as it can impact the stability of your Kubernetes cluster.
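One way to do that inspection, following the approach in the Kubernetes documentation, is to read the raw value of a Secret with etcdctl: a value encrypted by the aescbc provider starts with the prefix k8s:enc:aescbc:v1: followed by the key name, while an unencrypted one does not. The small helper below checks for that prefix. The etcdctl call in the comments assumes kubeadm's default certificate paths and a Secret named my-secret in the default namespace, so adjust both for your cluster.

```shell
# Succeeds if the raw etcd value on stdin starts with the prefix that the
# aescbc provider writes ("k8s:enc:aescbc:v1:<key name>:"). Values stored
# without encryption start with the plain "k8s" storage prefix instead.
is_aescbc_encrypted() {
  head -c 64 | grep -qa '^k8s:enc:aescbc:v1:'
}

# Usage on a kubeadm control-plane node (certificate paths are kubeadm defaults):
#   ETCDCTL_API=3 etcdctl \
#     --cacert=/etc/kubernetes/pki/etcd/ca.crt \
#     --cert=/etc/kubernetes/pki/etcd/server.crt \
#     --key=/etc/kubernetes/pki/etcd/server.key \
#     get /registry/secrets/default/my-secret --print-value-only | is_aescbc_encrypted \
#     && echo "my-secret is encrypted at rest"
#
# Secrets written before encryption was enabled stay unencrypted until they are
# rewritten, which the Kubernetes documentation does with:
#   kubectl get secrets --all-namespaces -o json | kubectl replace -f -
```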

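5. Restart the API server and verify encryption:

The encryption configuration is read by the kube-apiserver, not by etcd, so it is the API server that needs to restart. On kubeadm clusters it runs as a static pod, and the kubelet restarts it automatically when its manifest changes. If your API server runs as a systemd service, restart it yourself:

```shell
systemctl restart kube-apiserver
```

Check the API server logs and status to ensure that encryption works correctly, and look for any error messages. Also verify that you can still read your existing secrets after enabling encryption, since a mistake in the provider list can leave previously stored data unreadable.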

6. Make regular backups

Maintain regular backups of your etcd data even after enabling encryption. Data loss can still occur for various reasons, and backups are your safety net. Keep a secure backup of your encryption keys and ensure they are well-protected. Also, consider implementing key rotation procedures and other security best practices for your encryption keys.

Note: Etcd encryption secures data at rest; it does not protect data in transit.

External secret encryption (Dual Envelope Encryption)

Secret encryption and management in Git

You should be concerned about secrets in Git because anything committed stays in the repository's history: a secret that is not encrypted before it is committed can be exposed to anyone with access to the repository or any of its clones, potentially leading to severe security vulnerabilities and reputational damage. Secrets can be revealed not only in the files themselves but also in commit messages, logs, diffs, and other branches and tags.

Prioritizing secret encryption in Git is fundamental to preserving the privacy and security of your codebase and the sensitive information it may contain.

Tools for encrypting secrets in Git

In this section, we will discuss a few tools and methodologies for encrypting Kubernetes secrets in Git.

Secrets Operations (SOPS)

SOPS (Secrets OPerationS) is a tool for managing and encrypting secrets in Kubernetes and other environments. It is versatile and can encrypt and manage secrets stored in various file formats, including YAML, JSON, and more.

SOPS is handy for Kubernetes because it allows you to store sensitive configuration data, such as API keys, passwords, or certificates, in a secure and encrypted manner.

To use SOPS for encryption of your secrets or sensitive information in Kubernetes, take the following steps:

1. Install SOPS

First, install SOPS on your local machine and on any systems where you intend to use it. You can install SOPS with Homebrew, or download a binary or .deb package from the project's GitHub releases page.

```shell
ORG="getsops"   # the project moved from github.com/mozilla/sops to github.com/getsops/sops
REPO="sops"
latest_release=$(curl --silent "https://api.github.com/repos/${ORG}/${REPO}/releases/latest" | grep '"tag_name":' | sed -E 's/.*"v([^"]+)".*/\1/')

# AMD64
curl -L "https://github.com/${ORG}/${REPO}/releases/download/v${latest_release}/sops_${latest_release}_amd64.deb" -o sops.deb && sudo apt-get install ./sops.deb && rm sops.deb

# ARM64
curl -L "https://github.com/${ORG}/${REPO}/releases/download/v${latest_release}/sops_${latest_release}_arm64.deb" -o sops.deb && sudo apt-get install ./sops.deb && rm sops.deb

# macOS
brew install sops
```

Check out the official SOPS repository on GitHub for other platforms.

2. Install GPG and generate a GPG key

GPG (GNU Privacy Guard) is open-source encryption software released under the GNU General Public License (GPL). It is widely used for secure communication, key generation, and data encryption: data encrypted to a public key can only be decrypted by someone holding the matching private key. If you do not have GPG installed, install it so you can create the key that SOPS will use to encrypt your secrets.

```shell
# Debian/Ubuntu
sudo apt update
sudo apt install gnupg

# RedHat/CentOS
sudo yum install gnupg

# Fedora
sudo dnf install gnupg

# macOS
brew install gnupg
```

To generate a GPG key, use the --gen-key option as shown:

```shell
gpg --gen-key
```

This command will take you through a step-by-step process to create your public and private keys for encryption.

Note: GPG is not the only option. SOPS can also encrypt with age keys, with cloud KMS keys (AWS KMS, Google Cloud KMS, Azure Key Vault), and with HashiCorp Vault, so choose whichever suits your needs.

3. Create a SOPS configuration file

```shell
first_pgp_key=$(gpg --list-secret-keys --keyid-format LONG | grep -m1 '^sec' | awk '{print $2}' | cut -d '/' -f2)

cat <<EOF > .sops.yaml
creation_rules:
  - encrypted_regex: "^(data|stringData)$"
    pgp: >-
      ${first_pgp_key}
EOF
```

The snippet creates a .sops.yaml configuration file for SOPS. The .sops.yaml file specifies how SOPS should handle the encryption and decryption of files, particularly for managing secrets. Here is a breakdown of the snippet:

- first_pgp_key=$(gpg --list-secret-keys --keyid-format LONG | grep -m1 '^sec' | awk '{print $2}' | cut -d '/' -f2)

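This command extracts the long key ID of the first secret key found in the GPG keyring.

gpg --list-secret-keys --keyid-format LONG lists the secret keys in GPG with long-format key IDs.

grep -m1 '^sec' filters the output to find the first line starting with 'sec', which indicates a secret key.

awk '{print $2}' extracts the second field from the filtered line, which has the form algorithm/key-ID (for example, rsa3072/ followed by the key ID).

cut -d '/' -f2 splits that field on '/' and selects the second part, the 16-character key ID. Note that this is not the full fingerprint: the SOPS documentation describes the pgp field as a list of fingerprints, and gpg prints the 40-character fingerprint on the line after sec, so using the fingerprint there is the safer choice.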

- cat <<EOF > .sops.yaml

This line starts a heredoc that writes the lines that follow, up to EOF, to .sops.yaml in the current directory, overwriting any existing file.

- creation_rules:

Creation rules specify how SOPS should encrypt files: which keys to use and which parts of each file to encrypt. SOPS applies the first rule that matches the file.

- encrypted_regex: "^(data|stringData)$"

This line tells SOPS to encrypt only the values of keys whose names match the regular expression within double quotes. "^(data|stringData)$" matches keys named exactly data or stringData, which are the fields that hold a Kubernetes Secret's values; other fields, such as apiVersion, kind, and metadata, stay readable. (Which files a rule applies to is controlled separately, with path_regex.)

- pgp: >- followed by ${first_pgp_key}

Specifies the PGP key that SOPS encrypts to. ${first_pgp_key} is replaced by the key ID we extracted earlier, and the >- folded block scalar places that value on the following line.

The output of this entire snippet is a .sops.yaml file that looks like this:

```yaml
creation_rules:
  - encrypted_regex: "^(data|stringData)$"
    pgp: >-
      ABCDEFGHIJKL...
```

4. Create your secret and encrypt it with SOPS

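```shell
kubectl create secret generic my-secret --from-literal=username=myuser --from-literal=password=mypassword --dry-run=client -o json | sops --encrypt --input-type json --output-type json /dev/stdin > new-sec.enc.json
```

The --dry-run=client -o json flags make kubectl print the Secret manifest instead of creating it in the cluster. sops --encrypt then reads that manifest from standard input (/dev/stdin) and writes the encrypted result to new-sec.enc.json. Because /dev/stdin has no file extension, --input-type and --output-type tell SOPS which format to parse and emit; use yaml for both (together with kubectl's -o yaml) if you prefer YAML output.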

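Note: If you have already written your secret to a file, say my-secret.yaml, you can encrypt it in place with SOPS:

```shell
sops -e -i my-secret.yaml
```

This command replaces the matching values in the file (here, data and stringData) with their encrypted form, using the key from the matching rule in .sops.yaml; it does not open an editor. To view or change an encrypted file later, run sops my-secret.yaml, which decrypts it into your default text editor and re-encrypts it when you save. Besides PGP, SOPS can encrypt with age or with KMS keys such as AWS KMS, so choose the encryption method that suits your needs.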
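Because the rule in the .sops.yaml above has no path_regex, it applies to every file SOPS touches. A variant that limits encryption to files named *.enc.yaml or *.enc.json, together with the decrypt-and-apply step that turns an encrypted file back into a Secret in the cluster, might look like the sketch below. SOPS_PGP_FP is a placeholder for your key's fingerprint. With a path_regex in place, the rule no longer matches input read from /dev/stdin, so encrypt a file with a matching name instead.

```shell
# Hypothetical variant of .sops.yaml: only files named *.enc.yaml / *.enc.json
# match the rule. SOPS_PGP_FP is a placeholder for your key's fingerprint.
SOPS_PGP_FP="${SOPS_PGP_FP:-REPLACE_WITH_YOUR_PGP_FINGERPRINT}"

cat <<EOF > .sops.yaml
creation_rules:
  - path_regex: '\.enc\.(yaml|json)\$'
    encrypted_regex: '^(data|stringData)\$'
    pgp: '${SOPS_PGP_FP}'
EOF

# Typical round trip with this rule (requires sops, kubectl, and the private key):
#   kubectl create secret generic my-secret --from-literal=password=mypassword \
#     --dry-run=client -o yaml > my-secret.enc.yaml
#   sops --encrypt --in-place my-secret.enc.yaml     # safe to commit from here on
#   sops --decrypt my-secret.enc.yaml | kubectl apply -f -
```

Decrypting only at deploy time keeps the plaintext out of Git, while the cluster still receives an ordinary Secret.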

Pre-commits and GitLeaks

Pre-commit hooks are small scripts or commands executed before a Git commit is allowed to proceed. They are used to perform various checks and tasks on your code to ensure its quality and consistency before it becomes part of the project's version history.

Pre-commit hooks can be used to perform tasks such as code formatting, linting, code style checks, and security checks to prevent sensitive information from being committed to a Git repository.
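To see how little machinery a hook needs, here is a minimal hand-written Git hook that uses neither the pre-commit framework nor Gitleaks. It enforces a hypothetical rule of our own: block any staged YAML file that declares a Kubernetes Secret but lacks the top-level sops: block that SOPS adds to encrypted files. It only catches that one pattern; a dedicated scanner like Gitleaks, described next, covers far more.

```shell
#!/usr/bin/env bash
# Minimal hand-written Git pre-commit hook: save as .git/hooks/pre-commit and chmod +x.
# Hypothetical rule for illustration: reject staged YAML files that declare a
# Kubernetes Secret but lack the top-level "sops:" block that SOPS adds on encryption.

# Reads a manifest on stdin; succeeds if it looks like an unencrypted Secret.
is_plaintext_secret() {
  local content
  content=$(cat)
  grep -Eq '^kind:[[:space:]]*Secret[[:space:]]*$' <<<"$content" &&
    ! grep -Eq '^sops:' <<<"$content"
}

# Checks every staged YAML file; returns non-zero (blocking the commit) on a hit.
check_staged_files() {
  local status=0 file
  while IFS= read -r file; do
    case $file in
      *.yaml | *.yml)
        # ":$file" is the staged (index) version of the file, not the working copy
        if git show ":$file" | is_plaintext_secret; then
          echo "pre-commit: $file looks like an unencrypted Kubernetes Secret" >&2
          status=1
        fi
        ;;
    esac
  done < <(git diff --cached --name-only --diff-filter=ACM)  # added/copied/modified only
  return "$status"
}

# Run the check only when executed as a hook, not when this file is sourced.
if [[ "${BASH_SOURCE[0]}" == "$0" ]]; then
  check_staged_files
fi
```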

GitLeaks is a tool designed to prevent sensitive information from being committed to Git repositories. It scans your Git repositories for secrets or sensitive data and alerts you when such data is found in your codebase.

Pre-commit and Gitleaks can work together to enhance the security of your Git repositories by preventing sensitive data from being committed.

When Pre-commit is configured with Gitleaks as a pre-commit hook, Gitleaks scans the code changes for potentially sensitive information, such as passwords or API keys.

If any sensitive data is detected during the pre-commit check, the commit process is halted, and you're prompted to address and rectify the issues.

To use Pre-commit with GitLeaks, follow these steps:

1. Install Pre-commit

Pre-commit is a Python-based tool, so you'll need to have Python installed on your system to use it. Here is how to install Pre-commit:

```shell
pip install pre-commit
```

2. Install Gitleaks

You can install GitLeaks using package managers like Homebrew, or directly download and build the source code from the GitLeaks GitHub repository.

```shell
# macOS
brew install gitleaks

# Linux (if your distribution packages gitleaks)
sudo apt update
sudo apt install gitleaks

# (OR) build from source (requires Go)
git clone https://github.com/gitleaks/gitleaks.git
cd gitleaks
go build -o gitleaks
sudo mv gitleaks /usr/local/bin
```

Verify that gitleaks was installed by running:

```shell
gitleaks version
```

3. Create a Pre-commit hook

Navigate to the root directory of your Git repository, create a .pre-commit-config.yaml file in a text editor, and define the pre-commit hooks you want to use. In our example, we will use Gitleaks.

```yaml
repos:
  - repo: https://github.com/gitleaks/gitleaks
    rev: v8.16.2
    hooks:
      - id: gitleaks
```

4. Install the Pre-commit hooks:

To install the hooks defined in your .pre-commit-config.yaml file, run the following command:

```shell
pre-commit install
```

5. Run the Pre-commit hooks

You can now run the pre-commit hooks by running the following command:

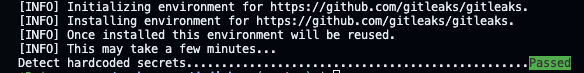

pre-commit run --all-filesPre-commit will check your changes before they are committed. If any issues are detected, it will prevent the commit from going through, and you'll need to address the issues before committing again. If your secrets are safe, you should get similar results as shown:

Environment variables

Managing secrets with environment variables in both development and production environments in Kubernetes is a common practice.

Environment variables do not secure data by themselves; rather, they let your workloads reference a Secret instead of having its value hard-coded in manifests or application code.

Passing sensitive information through environment variables is also not the most secure option, as the values can still be exposed in logs, process listings, or other ways.
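The process-listing risk is easy to see on a Linux machine: a process's environment is readable at /proc/<pid>/environ by the same user or by root, so anything able to inspect the container's processes can read the variable. The sketch below assumes Linux /proc; the variable MY_SECRET and its value are made up. It starts a shell with the variable set and has that shell read its own entry.

```shell
# Assumes Linux (/proc). MY_SECRET and its value are made up for the demo.
# The inner shell prints its own environment (NUL-separated in /proc) and keeps the secret line.
MY_SECRET='s3cr3t' sh -c 'tr "\0" "\n" < /proc/$$/environ | grep "^MY_SECRET="'
# prints: MY_SECRET=s3cr3t
```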

In this context, we’d instead create a secret or ConfigMap and reference it as an environment variable in a Kubernetes resource. Here’s how to do this:

1. Create a Kubernetes secret

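Use any of the methods listed earlier to create a Secret for your sensitive information. Use a ConfigMap to store environment variables that don't contain sensitive information. This keeps your secrets separate from your regular configuration.

2. Encrypt the secret with your tool of choice

If you keep the Secret manifest in Git, encrypt it with a tool such as SOPS and decrypt it at deploy time (for example, sops --decrypt my-secret.enc.yaml | kubectl apply -f -). The cluster receives an ordinary Secret, so its keys are referenced in exactly the same way as those of an unencrypted one.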

3. Use the secret as an environment variable in a Kubernetes resource as shown:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app-container
          image: my-app-image:latest
          env:
            - name: MY_SECRET
              valueFrom:
                secretKeyRef:
                  name: my-encrypted-secret
                  key: secret-data
```

4. Deploy your configuration

Apply the configuration to your Kubernetes cluster using kubectl apply -f your-config.yaml.

5. Read (and, if needed, decrypt) the secret in the container

Kubernetes decodes the Secret before injecting it, so the container sees the plaintext value of MY_SECRET and needs no base64 decoding. Application-side decryption is only necessary if the value stored in the Secret is itself ciphertext, for example a value you encrypted with a KMS key before putting it in the Secret.

In that case, the decryption method and library depend on how you encrypted the value in the first place. For example, if your application reads a file encrypted with `sops`, you could decrypt it with the SOPS Go `decrypt` package; for a KMS-encrypted value, you would use your cloud provider's KMS SDK.

Secret encryption with external KMS

An external Key Management Service (KMS) is a centralized system for securely creating, storing, managing, and auditing encryption keys. It provides a trusted and secure environment for managing cryptographic keys and performing encryption and decryption operations.

KMS services are offered by various cloud providers, including AWS KMS, Google Cloud KMS, and Azure Key Vault, as well as third-party solutions like HashiCorp Vault.

Synchronizing secrets between a Kubernetes cluster and an external, KMS-backed secret store is a key part of securely managing sensitive data in cloud-native applications.

This approach allows you to leverage the security and management capabilities of an external KMS to protect your secrets while still making them accessible to your Kubernetes workloads.

Why use an external KMS?

Kubernetes provides native mechanisms for secret management, but there are cases where using an external KMS is advantageous:

- Enhanced security: External KMS solutions often provide robust security features, hardware security modules, and centralized access control, which can be challenging to replicate within a Kubernetes cluster.

- Compliance requirements: Many industries and regulatory bodies have stringent requirements for data protection and access control. Using an external KMS can help meet these compliance standards.

- Multi-cloud support: External KMS solutions can be cloud-agnostic, allowing you to manage secrets consistently across multiple cloud providers.

- Centralized management: You can manage secrets consistently across multiple Kubernetes clusters or environments, and you have a single control point for secrets.

Synchronizing secrets between Kubernetes and an external KMS

Let’s walk through examples using the AWS and Google Cloud KMSs for secrets synchronization.

1. Set Up Google/AWS KMS

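Integrating Google Cloud or AWS with Kubernetes this way typically uses the Kubernetes Secrets Store CSI Driver together with a cloud-specific provider plugin (gcp or aws). Strictly speaking, these providers read secrets from Google Secret Manager and AWS Secrets Manager (or Parameter Store); the KMS holds the keys those services use to encrypt the secrets.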

For Google Cloud, you need to create a KeyRing and CryptoKey in Google Cloud KMS first. You can do this using the Google Cloud Console, gcloud command-line tool, or API. Make a note of the CryptoKey's fully-qualified name (FQN).

```shell
# Create a KeyRing
gcloud kms keyrings create my-keyring --location global

# Create a CryptoKey within the KeyRing
gcloud kms keys create my-cryptokey --location global --keyring my-keyring --purpose encryption
```

For AWS, you need to create an AWS KMS key in your AWS account. You can do this through the AWS Management Console or by using the AWS CLI. Make a note of the key's Amazon Resource Name (ARN), as you'll need it in the Kubernetes configuration.

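2. Install and Configure the Secrets Store CSI Driver and the Google Cloud/AWS provider

The Secrets Store CSI Driver is a Container Storage Interface (CSI) driver for Kubernetes that mounts secrets from external secret stores into pods as volumes. The driver is installed once per cluster, and each cloud needs its own provider plugin alongside it. You can install them with Helm (the Google Cloud provider also ships a plain manifest).

```shell
# Secrets Store CSI Driver (shared by every provider); syncSecret enables secretObjects syncing
helm repo add secrets-store-csi-driver https://kubernetes-sigs.github.io/secrets-store-csi-driver/charts
helm install csi-secrets-store secrets-store-csi-driver/secrets-store-csi-driver \
  --namespace kube-system --set syncSecret.enabled=true

# Google Cloud provider plugin (reads from Secret Manager)
git clone https://github.com/GoogleCloudPlatform/secrets-store-csi-driver-provider-gcp.git
kubectl apply -f secrets-store-csi-driver-provider-gcp/deploy/provider-gcp-plugin.yaml

# AWS provider plugin (reads from Secrets Manager / Parameter Store)
helm repo add aws-secrets-manager https://aws.github.io/secrets-store-csi-driver-provider-aws
helm install -n kube-system secrets-provider-aws aws-secrets-manager/secrets-store-csi-driver-provider-aws
```

The syncSecret.enabled=true setting is only needed if you want the driver to also copy mounted secrets into Kubernetes Secret objects (the secretObjects field in the next step). Put any further customization for your Google Cloud/AWS setup in a values.yaml file and pass it to helm install with -f.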

3. Create a SecretProviderClass custom resource

Define a SecretProviderClass custom resource that specifies which secrets you want to retrieve from Google Secret Manager or AWS Secrets Manager. Here's an example:

```yaml
# Google Cloud (replace PROJECT_ID with your project)
apiVersion: secrets-store.csi.x-k8s.io/v1
kind: SecretProviderClass
metadata:
  name: gcp-kms-provider
spec:
  provider: gcp
  parameters:
    secrets: |
      - resourceName: "projects/PROJECT_ID/secrets/my-secret/versions/latest"
        path: "my-secret"
  secretObjects:
    - secretName: my-secret
      type: Opaque
      data:
        - objectName: my-secret
          key: password
---
# AWS
apiVersion: secrets-store.csi.x-k8s.io/v1
kind: SecretProviderClass
metadata:
  name: aws-secrets-store
spec:
  provider: aws
  parameters:
    objects: |
      - objectName: "my-secret"
        objectType: "secretsmanager"
  secretObjects:
    - secretName: my-secret
      type: Opaque
      data:
        - objectName: my-secret
          key: password
```

4. Create a Kubernetes Resource

Now, you can create a Kubernetes resource that references the SecretProviderClass you created. In this example, we will use a pod. The pod can access the secrets synchronized from Google/AWS Secrets Manager.

```yaml
# Google Cloud
apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  containers:
    - name: my-container
      image: my-image
      volumeMounts:
        - name: secret-volume
          mountPath: /path/to/secrets
  volumes:
    - name: secret-volume
      csi:
        driver: secrets-store.csi.k8s.io
        readOnly: true
        volumeAttributes:
          secretProviderClass: gcp-kms-provider
---
# AWS
apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  containers:
    - name: my-container
      image: my-image
      volumeMounts:
        - name: secret-volume
          mountPath: /path/to/secrets
  volumes:
    - name: secret-volume
      csi:
        driver: secrets-store.csi.k8s.io
        readOnly: true
        volumeAttributes:
          secretProviderClass: aws-secrets-store
```

In this example, the secretProviderClass references the SecretProviderClass created earlier.

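Now you can deploy the Pod. The pod will have access to the secret retrieved from Google Cloud or AWS, mounted at the specified path. In practice, the pod also needs a Kubernetes service account that the cloud provider trusts (Workload Identity on GKE, IAM roles for service accounts on EKS) so that the provider can fetch the secret on its behalf.

The Secrets Store CSI Driver handles the retrieval and synchronization of the secrets, while the cloud secret manager stores them encrypted with keys managed by its KMS.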
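The secretObjects section only creates the Kubernetes Secret while at least one pod mounts the CSI volume: the Secrets Store CSI Driver documentation notes that syncing alone, without a mount, does not work, and the synced Secret is removed once no pod uses it. A pod that wants the value as an environment variable therefore keeps the volume, as in the sketch below. It assumes the AWS SecretProviderClass from step 3, the driver installed with syncSecret.enabled=true, and a secretObjects entry that maps the object into a key named password; the variable name DB_PASSWORD is hypothetical.

```shell
# Hypothetical pod: keeps the CSI volume mounted (required for syncing) and reads
# the synced Kubernetes Secret "my-secret" as an environment variable.
cat <<'EOF' > my-pod-synced.yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  containers:
    - name: my-container
      image: my-image
      env:
        - name: DB_PASSWORD            # hypothetical variable name
          valueFrom:
            secretKeyRef:
              name: my-secret          # secretName from secretObjects
              key: password            # assumed key from secretObjects.data
      volumeMounts:
        - name: secret-volume
          mountPath: /path/to/secrets
          readOnly: true
  volumes:
    - name: secret-volume
      csi:
        driver: secrets-store.csi.k8s.io
        readOnly: true
        volumeAttributes:
          secretProviderClass: aws-secrets-store
EOF

# Then, against a cluster: kubectl apply -f my-pod-synced.yaml
```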

RBAC: the key to safe secrets access

You’ve encrypted your secrets. Now what?

Applications and services need to use them, with the right permissions. That’s where Role-Based Access Control (RBAC) comes in.

Roles, authentication and authorization

RBAC is a fundamental feature of Kubernetes that governs who can access and perform actions on various resources within the cluster.

Secrets, which store sensitive information like credentials and keys, are subject to RBAC rules to ensure that only authorized entities can access and manage them.

Kubernetes separates authentication from authorization. Authentication verifies the identity of users and services (for example, through client certificates or service account tokens), while authorization, which RBAC provides, defines what actions they are allowed to perform. RBAC rules are essential for securing access to resources like secrets.

Service accounts with RBAC

Service accounts are also a fundamental component of RBAC in Kubernetes. Pods use service accounts to authenticate and access other resources, including secrets.

When a pod is created, it runs as a service account: the namespace's default service account unless you specify another one. Service accounts represent the identity of a pod or a set of pods, and the permissions granted to a service account through role bindings apply to its pods. You need to create service accounts for the pods that require access to secrets.

For example, if you have a pod that needs to read secrets, you can create a service account for it:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: my-app-sa
```

Secrets in Kubernetes are stored as resources, and RBAC rules apply to them. RBAC policies define which service accounts or users can read or write specific secrets.

This access control ensures that only authorized pods and services can access sensitive information stored in secrets.

From roles to role bindings

Suppose you have a secret containing a database password. You can create an RBAC role and role binding that grants read access to a specific service account. Pods using this service account can then access the secret to establish a database connection.

```yaml
# Example RBAC Role
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: secret-reader
rules:
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get", "list"]
```

Role bindings associate service accounts with RBAC roles, allowing them to perform the actions specified in the roles. For instance, you can bind the "secret-reader" role to the "my-app-sa" service account:

```yaml
# Example RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-secrets
subjects:
  - kind: ServiceAccount
    name: my-app-sa
roleRef:
  kind: Role
  name: secret-reader
  apiGroup: rbac.authorization.k8s.io
```

In this example, the "secret-reader" role allows the service account "my-app-sa" to get and list all secrets in the namespace where the Role and RoleBinding are created. Pods that run as "my-app-sa" can read those secrets, while pods using other service accounts gain nothing from this binding.

In your pod specifications, specify the service account that the pod should use. Pods associated with the service account inherit the permissions defined by the role bindings.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  serviceAccountName: my-app-sa
  containers:
    - name: my-container
      image: my-image
      # ...
```

RBAC rules can be fine-grained to control access to specific secrets or groups of secrets. This ensures that even within a single namespace, different pods or service accounts have different levels of access to secrets.
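For example, the secret-reader role above grants list, and listing secrets returns their full contents, so it effectively exposes every secret in the namespace. A tighter Role, sketched below, grants only get on a single named Secret through resourceNames. The Role name db-secret-reader and the Secret name db-password are hypothetical; the kubectl auth can-i commands in the comments are one way to check the effective permissions once the Role is bound to my-app-sa with a RoleBinding like the one above.

```shell
# Hypothetical least-privilege Role: "get" on one named Secret only, no "list".
cat <<'EOF' > db-secret-reader.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: db-secret-reader
rules:
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["db-password"]   # hypothetical Secret name
    verbs: ["get"]
EOF

# Against a cluster, after binding the Role to my-app-sa (roleRef name: db-secret-reader):
#   kubectl apply -f db-secret-reader.yaml
#   kubectl auth can-i get secrets/db-password --as=system:serviceaccount:default:my-app-sa
#   kubectl auth can-i list secrets --as=system:serviceaccount:default:my-app-sa
```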

You can also integrate RBAC roles into your CI/CD pipelines to ensure that only the necessary components have access to secrets during deployments. Secrets can be automatically injected into pods that need them, and RBAC helps control which pods are eligible for this.

Conclusion: secrets are too important to risk

In this piece, we have taken a deep dive into Kubernetes secret management and encryption to ensure the utmost security of your containerized applications.

We began by exploring the concept of Kubernetes secrets, their significance, and the potential risks associated with their mishandling.

To address these concerns, we covered various aspects of secret management, starting with the different methods of creating and consuming secrets in Kubernetes. We provided practical examples for creating secrets using YAML definitions and consuming them through environment variables and volume mounts within pods.

We discussed encrypting secrets at rest. Kubernetes natively handles encryption at rest through an EncryptionConfiguration, protecting secrets stored in etcd. We discussed how to enable encryption at rest and verify its implementation.

For an extra layer of protection, we introduced the concept of Dual Envelope Encryption, whereby secrets are encrypted twice: once by Kubernetes at rest and again by an external mechanism, such as SOPS or a Key Management Service (KMS), before they reach the cluster. This approach adds a robust layer of security, making it more difficult for unauthorized entities to access sensitive information.

We also explored the potential vulnerabilities of storing secrets in version control repositories like Git, including encrypting secrets before committing them to Git repositories.

If your enterprise uses HashiCorp Vault to manage secrets, Palette makes it easy to install Vault in each cluster out of the box. Remember that managing secrets effectively is just one part of a wider infrastructure security strategy. For some ideas on how we can help you tackle the bigger picture in your Kubernetes environment, check out our security page.
