Portworx is a modern, distributed, cloud-native storage platform designed to work with containers and microservices, which makes it a popular choice for storage management in Kubernetes. It abstracts storage devices to expose a unified overlay storage layer to cloud-native applications. Portworx offers a free license with limited functionality and an enterprise license with full functionality.

You can deploy Portworx on a Kubernetes cluster using any of the following methods:

- Manifest

- Portworx operator

- Helm charts

In this blog, we will walk through the manifest approach to setting up Portworx on a Kubernetes cluster deployed in a VMware vSphere environment.

Prerequisites

- Kubernetes cluster (with at least 3 workers)

- kubectl

- Access to PX-Central

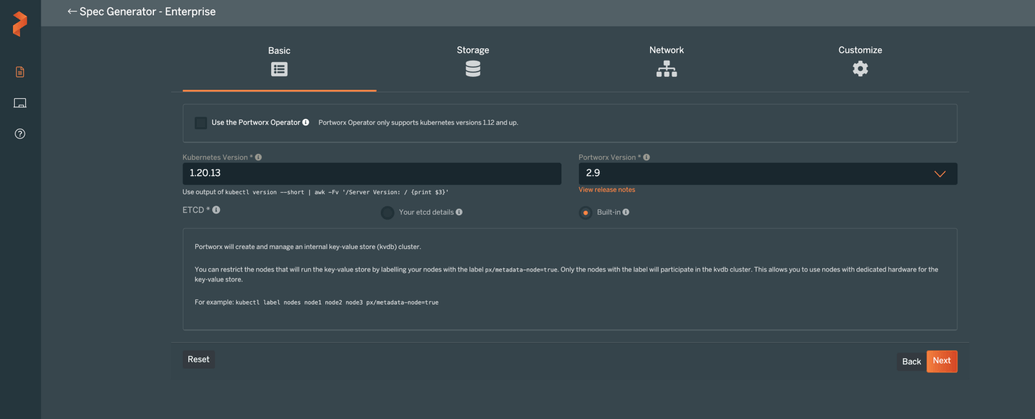

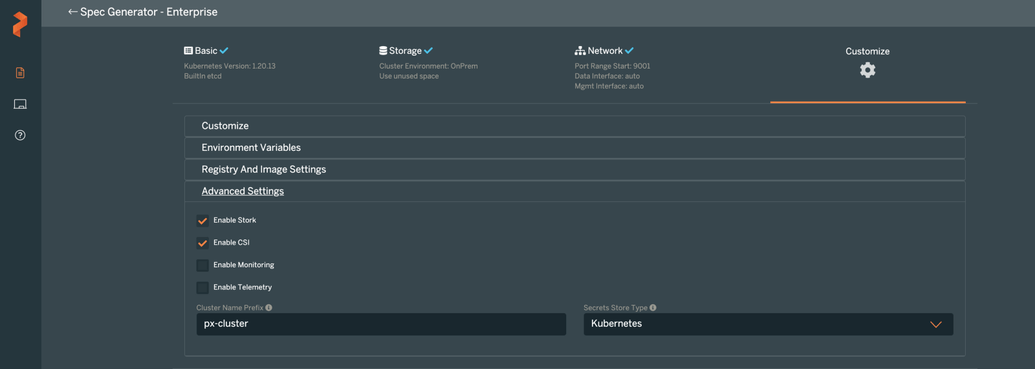

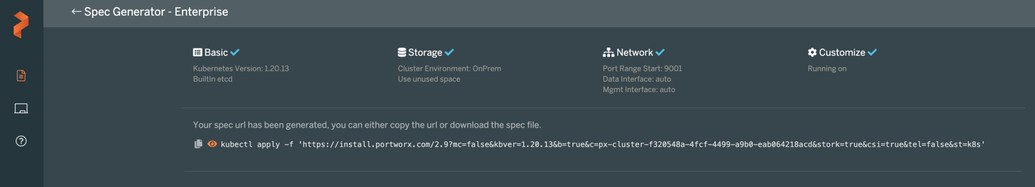

- Log in to PX-Central, a web-based interface for generating the Portworx spec. Select Portworx Enterprise (which comes with a 30-day trial) and fill in the additional fields to match your cluster. The screenshots above show example values for generating a spec for a Kubernetes v1.20.13 cluster deployed on VMware vCenter. Portworx offers many options to customize, such as internal vs. external etcd, how the disks are supplied, and whether to enable Stork, CSI, and monitoring.

Basic Portworx settings

At this point, you can download the spec by clicking the link provided. The generated spec will contain the following Portworx components:

- Portworx

- Autopilot

- Stork *

- CSI *

- Monitoring *

Note that components marked with * are included in the spec only when they are enabled.

- Generating the spec through a curl endpoint: In the target cluster, first create a secret that contains the vSphere user credentials.

Create a secret that holds the vCenter user credentials
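A minimal sketch of this step follows. It writes a Secret manifest to a local file with base64-encoded credentials. The secret name `px-vsphere-secret`, the `kube-system` namespace, and the `VSPHERE_USER` / `VSPHERE_PASSWORD` keys are assumptions here and should match whatever your generated Portworx spec references; the user and password are placeholders.

```shell
# Sketch: write a Secret manifest holding the vCenter credentials.
# Secret name, namespace, and key names are assumptions; match them to
# the names your generated Portworx spec expects.
VC_USER="${VC_USER:-administrator@vsphere.local}"   # placeholder user
VC_PASSWORD="${VC_PASSWORD:-changeme}"              # placeholder password

# Secret data values must be base64-encoded; tr strips any line wrapping.
b64() { printf '%s' "$1" | base64 | tr -d '\n'; }

cat > px-vsphere-secret.yaml <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: px-vsphere-secret
  namespace: kube-system
type: Opaque
data:
  VSPHERE_USER: $(b64 "$VC_USER")
  VSPHERE_PASSWORD: $(b64 "$VC_PASSWORD")
EOF
```

Creating the secret is then a matter of running `kubectl apply -f px-vsphere-secret.yaml` against the target cluster.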

Set the vSphere environment details:

Notes on the disk template parameters:

- type: Supported types are thin, zeroedthick, eagerzeroedthick, and lazyzeroedthick.

- size: The size of the VMDK in GiB.
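The environment details can be sketched as a handful of exported variables. The variable names follow the ones we recall from the Portworx vSphere install guide and should be treated as assumptions; every value is a placeholder to replace with your own vCenter details.

```shell
# Placeholder values; replace each with the details of your own vCenter.
export VSPHERE_VCENTER="vcenter.example.com"      # vCenter hostname or IP
export VSPHERE_VCENTER_PORT=443                   # default vSphere services port
export VSPHERE_DATASTORE_PREFIX="px-datastore-"   # prefix of the datastores to use
# Disk template: provisioning type plus VMDK size in GiB.
export VSPHERE_DISK_TEMPLATE="type=zeroedthick,size=150"
```

With this template, each disk would be created as a 150 GiB zeroedthick VMDK on a datastore whose name starts with the given prefix.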

Generate the spec file from the curl endpoint using these values.
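As a rough sketch, the spec URL can be assembled from the values set earlier. The Portworx release (`PX_VERSION`), the cluster name, and the query parameters are assumptions recalled from the Portworx install documentation; compare the result with the URL that PX-Central shows for your own configuration before relying on it.

```shell
# Placeholders; each can be overridden from the environment.
PX_VERSION="${PX_VERSION:-2.9}"                   # assumed Portworx release
KBVER="${KBVER:-1.20.13}"                         # Kubernetes server version
CLUSTER_NAME="${CLUSTER_NAME:-px-demo-cluster}"   # hypothetical cluster name
VSPHERE_VCENTER="${VSPHERE_VCENTER:-vcenter.example.com}"
VSPHERE_DATASTORE_PREFIX="${VSPHERE_DATASTORE_PREFIX:-px-datastore-}"
VSPHERE_DISK_TEMPLATE="${VSPHERE_DISK_TEMPLATE:-type=zeroedthick,size=150}"

# Query parameters (assumed): kbver = Kubernetes version, c = cluster name,
# b = internal KVDB, st = scheduler, csi = enable CSI, vsp = vSphere,
# ds = datastore prefix, vc = vCenter host, s = disk template wrapped in
# URL-encoded double quotes (%22).
SPEC_URL="https://install.portworx.com/${PX_VERSION}?kbver=${KBVER}&c=${CLUSTER_NAME}&b=true&st=k8s&csi=true&vsp=true&ds=${VSPHERE_DATASTORE_PREFIX}&vc=${VSPHERE_VCENTER}&s=%22${VSPHERE_DISK_TEMPLATE}%22"

echo "$SPEC_URL"
```

Downloading the spec is then `curl -fsL -o px-spec.yaml "$SPEC_URL"`. Building the URL in a variable first makes it easy to inspect before anything is fetched.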

You can override any of the settings in the spec file at this point. Then apply the generated px-spec to your Kubernetes cluster with kubectl apply.

In a few minutes, you should see the Portworx components being deployed on the cluster. Check the status of the Portworx pods and wait until they reach the Running state.
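The apply and status-check steps can be sketched as two small helper functions. The function names are hypothetical, and the `kube-system` namespace and `name=portworx` pod label are assumptions based on a typical generated spec; adjust them if your spec differs.

```shell
# Namespace and pod label are assumptions based on a typical generated spec.
PX_NAMESPACE="${PX_NAMESPACE:-kube-system}"

# Apply the downloaded spec (defaults to px-spec.yaml).
px_apply() {
  kubectl apply -f "${1:-px-spec.yaml}"
}

# List the Portworx pods along with the node each one runs on.
px_pods() {
  kubectl get pods -n "$PX_NAMESPACE" -l name=portworx -o wide
}
```

Run `px_apply`, then re-run `px_pods` until every pod reports Running.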

Once the pods are ready, you can check the Portworx cluster status by running pxctl status inside one of the Portworx pods.

pxctl is the go-to utility for almost anything in Portworx.
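One way to run the status check is sketched below: pick the first Portworx pod and execute pxctl inside it. The helper name `px_status` is hypothetical, and the namespace, pod label, and `/opt/pwx/bin/pxctl` path are assumptions that match a default install.

```shell
PX_NAMESPACE="${PX_NAMESPACE:-kube-system}"   # assumed install namespace

# Run "pxctl status" inside the first Portworx pod found.
px_status() {
  local pod
  pod="$(kubectl get pods -n "$PX_NAMESPACE" -l name=portworx \
    -o jsonpath='{.items[0].metadata.name}')" || return 1
  kubectl exec "$pod" -n "$PX_NAMESPACE" -- /opt/pwx/bin/pxctl status
}
```

Calling `px_status` prints the cluster summary, including the nodes in the cluster and the storage capacity they contribute.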

For dynamic provisioning of volumes, use the Portworx provisioner in the storage class.

Note that this storage class is set as the cluster default.
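A minimal sketch of such a storage class is written to a file below. The class name `portworx-sc` and the replication factor are illustrative assumptions; the provisioner shown is the in-tree Portworx one.

```shell
cat > portworx-sc.yaml <<'EOF'
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: portworx-sc
  annotations:
    # Marks this class as the cluster default.
    storageclass.kubernetes.io/is-default-class: "true"
# In-tree Portworx provisioner; a CSI-based setup would use pxd.portworx.com.
provisioner: kubernetes.io/portworx-volume
parameters:
  repl: "2"   # replicas kept for each volume (illustrative value)
EOF
```

Apply it with `kubectl apply -f portworx-sc.yaml`. Because of the default-class annotation, claims that omit a storage class name will use this class.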

With this storage class in place, any stateful application deployed in the Kubernetes cluster should get its volumes provisioned dynamically. A small test manifest is enough to confirm that dynamic provisioning works.

When the test resource is applied to the Kubernetes cluster, persistent volumes should be created and reach the Bound status.

This sums up the introduction to deploying Portworx using a manifest for dynamic provisioning of volumes. Stay tuned for more!
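As one possible test, the sketch below writes a PersistentVolumeClaim that leaves out the storage class name, so it relies on the default class. The claim name `px-test-pvc` and the 2Gi size are hypothetical.

```shell
cat > px-test-pvc.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: px-test-pvc
spec:
  # No storageClassName: the claim falls back to the default storage class.
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi
EOF
```

Apply it with `kubectl apply -f px-test-pvc.yaml`, then check the result with `kubectl get pvc px-test-pvc`.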

Useful links:

- Data services for database containers & stateful containers (portworx.com)

- Persistent storage for containers (central.portworx.com)