Secure software artifact sharing: the missing link in Kubernetes platform security

Software? Trust me bro

It never fails. Every time I sit down to build something — usually a server of some sort — I discover I’m missing one critical piece of software.

When I started my career as a system administrator in the 1990s, software delivery was at best fragile. Some applications shipped on dozens of floppy disks, and it was almost guaranteed that I’d misplace one. CD-ROMs eventually reduced clutter but introduced their own human-caused risks, such as accidental scratches to discs.

Of course, back then, we rarely questioned the trust we placed in vendor-provided software. As long as it arrived on official disks, floppy or CD-ROM, we assumed it was authentic.

Human error didn’t help security, either. I still remember updating a network driver from a floppy supplied by a well-known vendor, only to find it contained the NATAS virus. So much for trusting a reliable source. I began to realize that I couldn’t trust what vendors shipped to me, so I shifted that trust to the internet and the resources I found there.

The growth of the internet made software easier to get (if you could tolerate the slow downloads), but it never solved the core problem of acquiring trustworthy software. New obstacles simply replaced the old ones, and teams still needed to validate the integrity and authenticity of the software they used.

Digital signatures and code signing for operating systems and applications have existed for many years. Around the early 2000s, practices such as Software Bills of Materials (SBOMs) and software supply chain security began to emerge, yet none of them gained widespread adoption until the 2010s.

Regardless, teams sought to impose order on this chaos and address the lingering sense of insecurity. Centralized file servers and virtual machines were adopted to provide greater control over software and to enable tightly managed templates. These strategies were somewhat effective until the rise of containers fundamentally changed the landscape.

Different era, same anxiety.

So, as we moved from slow-evolving monolithic software to thousands of microservices that depend on an ever-shifting landscape of open source packages, libraries, and projects, the SBOM became a tangled mess.

A security threat could be lurking three layers deep in your dependency tree and still end up installed on your systems. It also became easier to lose track not just of what you needed, but of where it came from. And this wasn’t just a personal problem anymore: it affected entire teams, multiple environments, multiple clouds, and sometimes multiple organizations.

At that point, the real challenge wasn’t downloading software. It was knowing:

- What you shipped

- Where it went

- Who was using it

- And whether it still met security and best-practice expectations.

Playing our part to keep your clusters safe

The complex challenges of software trust need multifaceted solutions.

For our part, our Palette management platform can play an important role as a controller of what software makes it into your Kubernetes clusters.

That’s why Palette has offered SBOM scanning for years, giving you auditability at the point of deployment.

It’s why you, as a platform team, can use Palette’s granular RBAC to restrict your overexcited developers to deploying only from governance-approved Cluster Profiles. And it’s why our VerteX edition for regulated industries can even restrict the installation of non-FIPS packs.

These are all features that we have implemented to help admins control the software risks that they're deploying to their Kubernetes environments.

As an organization, we also know it’s important to play our part in the wider community. That’s why we’ve joined the OpenSSF, which shepherds projects such as SLSA, Sigstore, and a host of SBOM components. My dear Spectro Cloud colleague Will Crum is also now co-chair of the CNCF Public Sector User Group, which recently published a white paper on software supply chain security.

Yes, Spectro Cloud is also a software publisher, and a publisher can be either part of the problem or part of the solution. That’s why, when Spectro Cloud introduced Artifact Studio, my reaction was immediate: they built this for the kind of admin I am.

Artifact Studio: our way of offering software you can trust

The Spectro Cloud Artifact Studio is a unified experience that helps airgapped, regulatory-focused, and security-conscious organizations populate their registries with the bundles, packs, and installers they need for self-hosted Palette, Palette VerteX, or PaletteAI.

Artifact Studio provides the software you might want to install on the clusters you create with Palette (operating systems, Kubernetes distributions, service meshes, observability tools, and so on). You can pull it directly from our repositories, or download it locally and host it in your own repositories in an airgapped or private environment. Either way, packs and images live in a single location, which streamlines access and management.

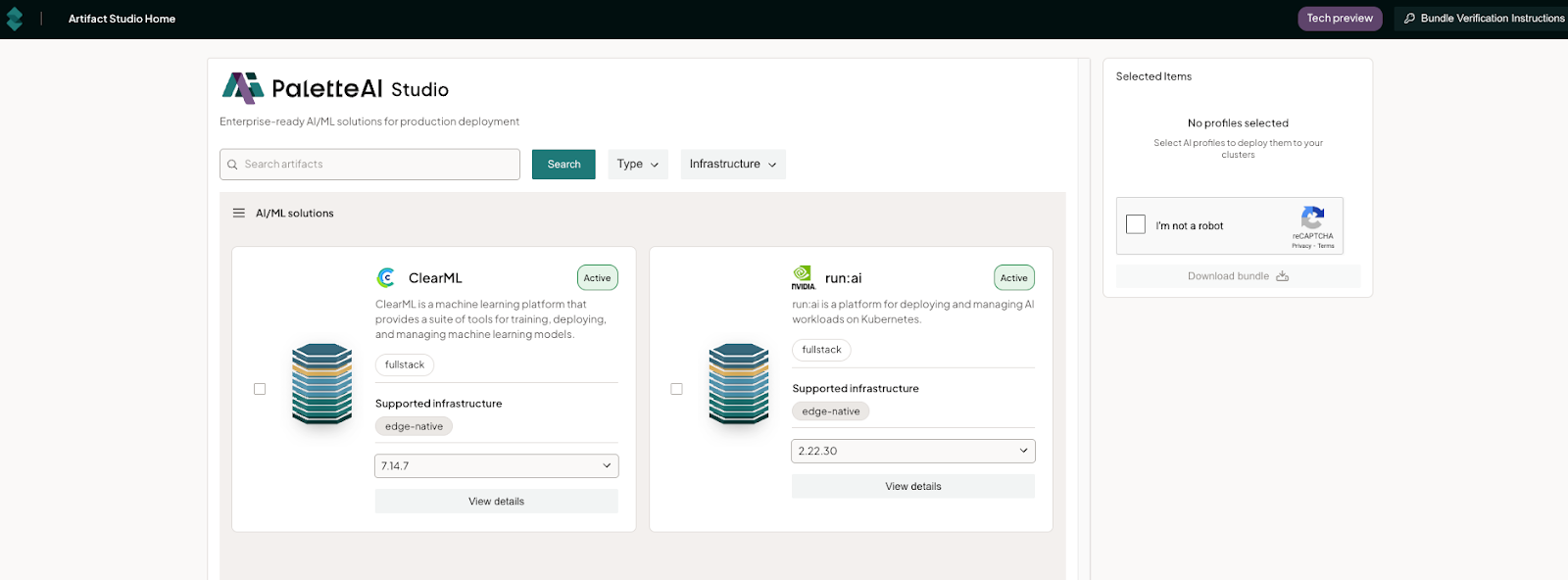

Now I can go to one location, select the versions I need for Palette or VerteX, choose the required hardware type, download the artifacts, and verify their authenticity. And if my developers need their own AI platform, I can download a prebuilt pack that uses either ClearML or Run:ai. No more searching for packs or guessing where I got them.

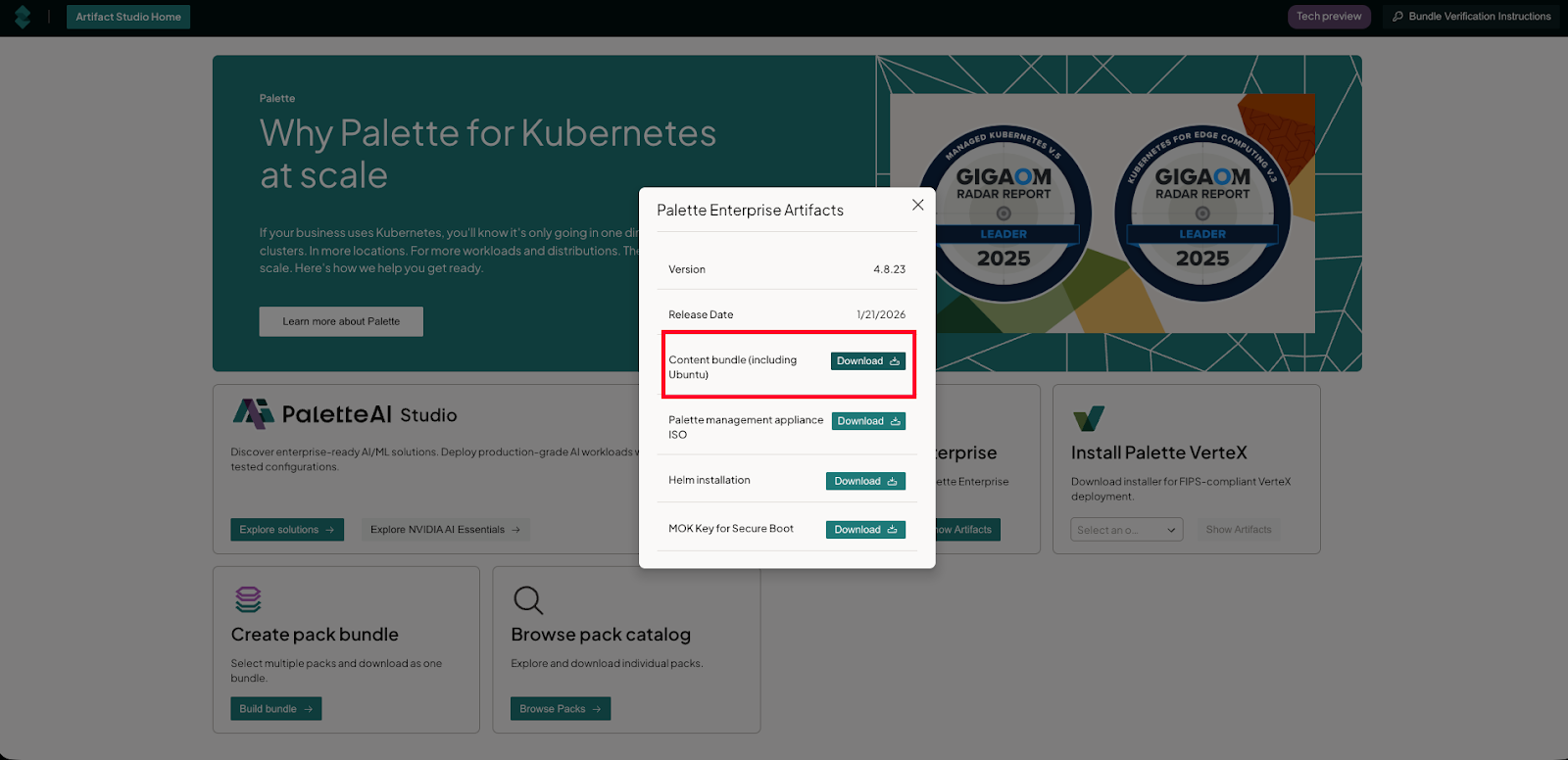

For example, to install the Palette management plane as an appliance, I would otherwise have to configure a Linux x64 system (most likely Ubuntu), then lock it down and secure it. That process alone could take a full day or more. Instead, I can go to Artifact Studio and download every component I need in the format I prefer: a content bundle with Ubuntu, a bootable ISO of the entire management appliance, or Helm charts.

Why secure artifact sharing matters

Whether or not a platform is customer-facing, artifact distribution is a security boundary. Every binary, image, or package you provide is meant to be deployed into an environment, often with elevated privileges. That means you need to know not only that the software works, but also that it is authentic and unmodified. From a security and compliance perspective, “we think that’s the right file” is never an acceptable answer.

This becomes especially important during audits, security reviews, authority to operate (ATO) assessments, and incident investigations. Say an admin reports an issue with a particular piece of software in production. You ask them for the artifact’s checksum, and lo and behold, it doesn’t match what engineering expects.

Now the questions start: Was the artifact rebuilt? Was it downloaded from an old link? Did someone email a patched version months ago to “help unblock” a deployment? It’s the age-old problem with computers: humans get involved. Without control and visibility, people introduce problems, intentionally or not, that can turn a routine support case into a potential security incident.
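The checksum comparison at the heart of this scenario is easy to script. Below is a minimal Python sketch that uses only the standard library. It assumes the expected SHA-256 digest comes from a trusted source, such as the release information engineering relies on. The helper names `sha256_of` and `matches_expected` are illustrative only and are not part of any Spectro Cloud tooling.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1024 * 1024) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks.

    Streaming keeps memory use flat even for multi-gigabyte ISOs.
    """
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_expected(path: Path, expected_hex: str) -> bool:
    """Compare a file's digest with the expected one, ignoring case and surrounding spaces."""
    return sha256_of(path) == expected_hex.strip().lower()


# Usage (hypothetical file name and digest):
# matches_expected(Path("palette-appliance.iso"), "<digest engineering expects>")
```

A `False` result doesn't tell you which of the questions above is the answer. It only tells you the file isn't the one engineering expects, and that is where provenance records become essential.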

Secure artifact sharing, one of Artifact Studio’s main features, is a simple concept that reduces exactly this kind of uncertainty. Because artifacts are distributed through a controlled system, DevOps teams access only approved, validated binaries. Every artifact has a known origin, a clear version, and a defined lifecycle.

For organizations that support airgapped, higher-security, or regulated environments, an internal registry of tested and verified packs is critical. Finding those packs and making sure you have the right ones can be a challenge, and simplifying that process is Artifact Studio’s main purpose. You can filter by hardware type, FIPS certification status, product edition (Palette VerteX or Palette Enterprise), and supported release.
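You may mirror these artifacts into an internal registry and want the same filters over your own index. The following is a hypothetical, minimal Python model of that idea. The `PackEntry` fields and the `filter_packs` function are illustrative assumptions; they are not Artifact Studio’s data model or API.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List, Optional


@dataclass(frozen=True)
class PackEntry:
    """Hypothetical index entry for a mirrored pack; fields mirror the filters above."""
    name: str
    version: str
    hardware: str              # e.g. "amd64" or "arm64" (assumed values)
    fips: bool                 # FIPS certification status
    editions: FrozenSet[str]   # e.g. {"Palette Enterprise", "Palette VerteX"}
    releases: FrozenSet[str]   # Palette releases the pack supports


def filter_packs(
    catalog: Iterable[PackEntry],
    hardware: Optional[str] = None,
    fips_only: bool = False,
    edition: Optional[str] = None,
    release: Optional[str] = None,
) -> List[PackEntry]:
    """Return the entries that satisfy every filter supplied; None means 'any'."""
    matches = []
    for entry in catalog:
        if hardware is not None and entry.hardware != hardware:
            continue
        if fips_only and not entry.fips:
            continue
        if edition is not None and edition not in entry.editions:
            continue
        if release is not None and release not in entry.releases:
            continue
        matches.append(entry)
    return matches
```

The filters combine with AND semantics, so narrowing by edition and release returns only packs that satisfy both conditions.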

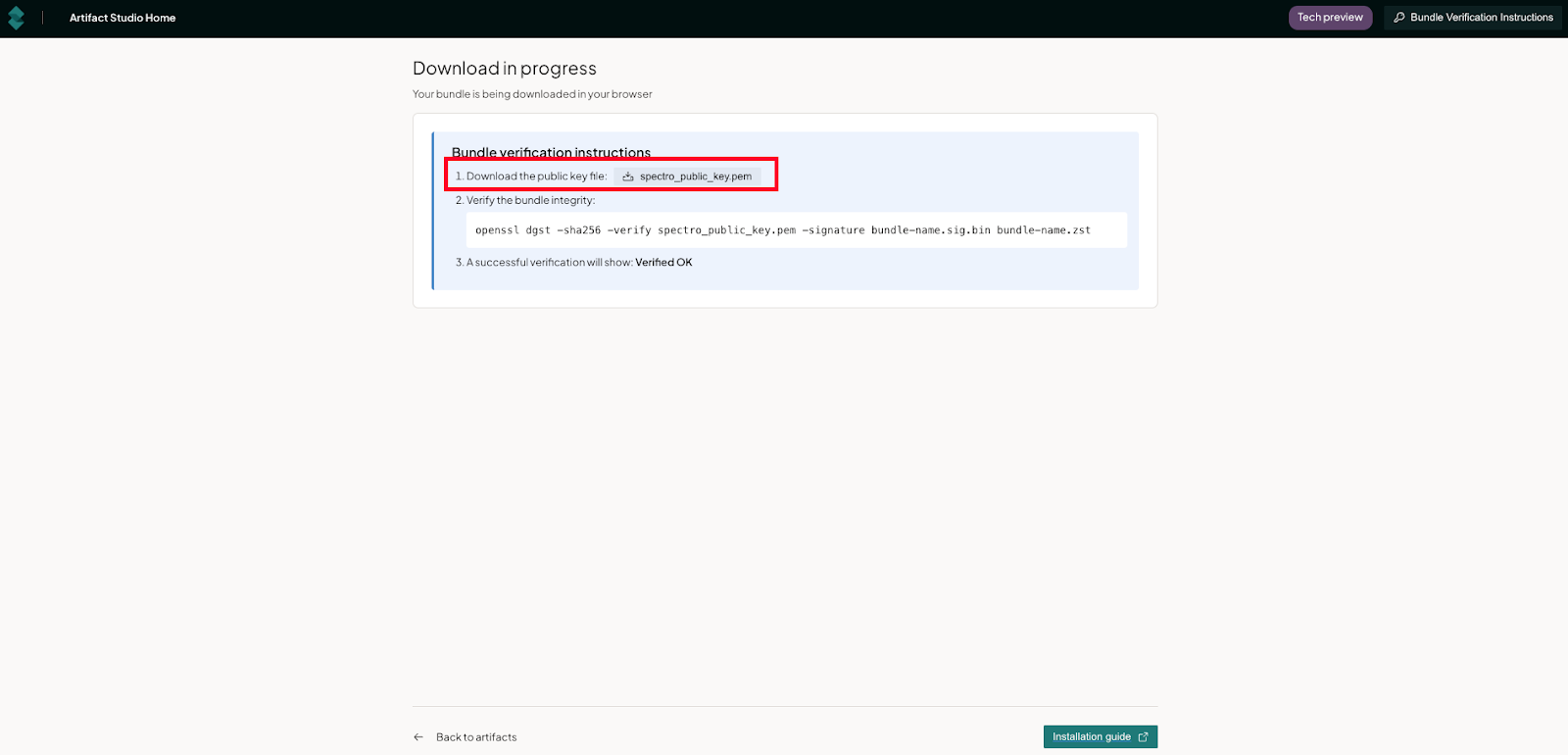

When you download a bundle, an individual pack, or an ISO, the download includes a signature file, sig.bin. You can verify that signature with the public key in spectro_public_key.pem to confirm the artifact is authentic and unmodified. You can validate a single file or follow the guidance in the documentation to validate multiple files.
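For scripted checks, here is a minimal Python sketch using the third-party `cryptography` package. It rests on an assumption the text above doesn’t confirm: that sig.bin is a plain SHA-256 signature over the artifact’s bytes. That means either RSA PKCS#1 v1.5 or DER-encoded ECDSA, which are the formats `openssl dgst -sha256 -sign` produces. Spectro Cloud’s documentation describes the actual procedure. Treat `verify_artifact` and `verify_all` as hypothetical helpers, not the official method.

```python
import hashlib
from pathlib import Path
from typing import Dict, Iterable, Tuple

from cryptography.exceptions import InvalidSignature
from cryptography.hazmat.primitives import hashes, serialization
from cryptography.hazmat.primitives.asymmetric import ec, padding, rsa, utils


def _sha256_digest(path: Path, chunk_size: int = 1024 * 1024) -> bytes:
    """Hash the artifact in chunks so large ISOs never have to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.digest()


def verify_artifact(artifact: Path, signature: Path, public_key_pem: Path) -> bool:
    """Return True if `signature` is a valid signature of `artifact` under the key.

    ASSUMPTION: a SHA-256 RSA (PKCS#1 v1.5) or ECDSA signature over the raw file bytes.
    """
    public_key = serialization.load_pem_public_key(public_key_pem.read_bytes())
    sig = signature.read_bytes()
    digest = _sha256_digest(artifact)
    # Prehashed tells the library the SHA-256 digest was already computed above.
    prehashed = utils.Prehashed(hashes.SHA256())
    try:
        if isinstance(public_key, rsa.RSAPublicKey):
            public_key.verify(sig, digest, padding.PKCS1v15(), prehashed)
        elif isinstance(public_key, ec.EllipticCurvePublicKey):
            public_key.verify(sig, digest, ec.ECDSA(prehashed))
        else:
            raise TypeError(f"unsupported key type: {type(public_key).__name__}")
    except InvalidSignature:
        return False
    return True


def verify_all(
    pairs: Iterable[Tuple[Path, Path]], public_key_pem: Path
) -> Dict[str, bool]:
    """Verify several (artifact, signature) pairs; how files are paired is an assumption."""
    return {str(a): verify_artifact(a, s, public_key_pem) for a, s in pairs}
```

For example, `verify_artifact(Path("bundle.tar"), Path("sig.bin"), Path("spectro_public_key.pem"))` returns `False` if even one byte of the artifact has changed since it was signed. The name `bundle.tar` is a placeholder.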

This process is how Artifact Studio simplifies compliance and security. Instead of reconstructing history after an issue, you can point to an authoritative source of truth: what was released, when it was released, and who it was intended for. It also gives you an easy way to ensure that each team has trusted access to the resources it needs.
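The sketch below makes “authoritative source of truth” concrete with a release ledger keyed by SHA-256 digest. A checksum reported from the field then maps straight back to what was released, when, and for whom. This is a minimal Python illustration under our own assumptions: `ReleaseRecord`, `index_by_digest`, and `identify` are hypothetical names and do not reflect how Artifact Studio stores or exposes its records.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, Iterable, Optional


@dataclass(frozen=True)
class ReleaseRecord:
    """Hypothetical ledger entry: what was released, when, and for whom."""
    name: str
    version: str
    sha256: str
    released: date
    audience: str


def index_by_digest(records: Iterable[ReleaseRecord]) -> Dict[str, ReleaseRecord]:
    """Build a digest -> record index, normalizing digests to lowercase hex."""
    index: Dict[str, ReleaseRecord] = {}
    for record in records:
        key = record.sha256.lower()
        # Two different releases sharing one digest means the ledger itself is wrong.
        if key in index and index[key] != record:
            raise ValueError(f"digest {key} maps to two different releases")
        index[key] = record
    return index


def identify(digest: str, index: Dict[str, ReleaseRecord]) -> Optional[ReleaseRecord]:
    """Return the release that matches this digest, or None if it is unknown."""
    return index.get(digest.strip().lower())
```

If `identify` returns `None`, the file doesn’t correspond to any known release. That is the moment to escalate a support case to a security review.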

Secure artifact sharing isn’t about locking things down. It’s about making the artifacts you need easier to find, minimizing risk, and preserving trust even when things don’t go as planned.

Conclusion

The tools have changed since the days of floppy disks and scratched CD-ROMs, but the core challenge remains the same: getting the right software to the right place, reliably and with confidence. In today’s complex Kubernetes platforms, that challenge is amplified by scale, security expectations, and the need for ongoing guidance. Artifact Studio addresses it by bringing clarity and intention to artifact sharing in a single, cohesive approach. Delivering a reliable source of truth for software is the first step toward rebuilding trust that lasts well beyond the first download.

If this blog has piqued your interest and you want to try Palette and Artifact Studio, contact us for a quick 1:1 demo.
