How to provision bare metal K8s clusters with Cluster API and Canonical MaaS (…and still manage them like any other K8s cluster!)

With app modernization in full swing, enterprises across the board are building cloud native applications and orchestrating them with Kubernetes (K8s). With more apps than ever running in containers (and a growing share of them in production), IT and dev teams are looking for new ways to gain efficiency. One of them is running apps and the K8s platform directly on bare metal servers. The upside? Better performance, lower cost (say goodbye to hypervisor licenses) and less effort (one fewer layer to manage). Indeed, K8s has everything we need to run our apps directly on top of bare metal machines. The downside? Until now it has generally been quite challenging to install, run and manage bare metal K8s clusters. The underlying server and the K8s nodes are often managed as a “disconnected” experience, let alone treated as just another “location” in a multi-environment K8s management strategy. But what if there was a way to unify the experience and treat a bare metal server like any other cluster? Now there is, and in this blog we’ll look at how two open source technologies (and one new project) give us everything we need to deploy and manage bare metal K8s clusters in minutes!

This blog reflects the contribution of Spectro Cloud to the Cluster API CNCF project and the recent release of the Cluster API MaaS Provider.

The challenge is really the Operating System!

The challenge with running and managing bare metal K8s clusters today is that servers and operating systems are managed separately from the K8s nodes’ lifecycle. Typically the machines are pre-provisioned with an operating system (OS), and only then are the K8s components initialized on the running system (using kubeadm, kops, or other K8s orchestration tools). Leaving the physical server and its OS outside of your K8s management severely handicaps lifecycle operations: there is no support for automatic resiliency and scaling, and cluster and app availability can suffer because upgrades are done with the outdated “inline” (in-place) process. For smaller deployments and edge use cases this may work well enough, since the scale is small and a one-off “pets” approach is not the end of the world. But when you have to run and manage many bare metal machines in a data center or with a provider (e.g. for large-scale AI/ML processing), the “unique” care each server and OS needs from you becomes a significant headache, a risk and, at the end of the day, a cost.

Most organizations today run K8s on top of a hypervisor precisely to simplify managing the node and K8s together. The benefits are clear: we get consistent nodes and configuration, as well as basic operations such as cluster scaling, rolling upgrades, and potentially even automatic healing of failed nodes by replacing them with healthy ones. What if we could get these exact same capabilities for bare metal K8s clusters, while also gaining the efficiencies of running applications on bare metal, namely higher performance without the cost and operational complexity a hypervisor adds?

The solution: Bridging Cluster API and Canonical “Metal as a Service” (MaaS)

Let’s take a look at the ingredients before we dive in:

- One of the most popular K8s sub-projects for declarative lifecycle management is Cluster API. Cluster API brings K8s-style APIs and an “end-state” approach to cluster lifecycle management (creation, configuration, upgrade, destruction), and it is designed to work across various data center and cloud environments. Cluster API’s adoption has sky-rocketed over the years, with major modern container and K8s management platforms supporting it: Google Cloud’s Anthos, VMware’s Tanzu, and our own Spectro Cloud offering. Its declarative approach makes it easy to consistently manage many diverse clusters and scale them as required. But although Cluster API is a great tool for declaratively managing K8s environments, on its own it doesn’t solve our problem: until now there was no way to use it with bare metal servers managed by Canonical MaaS.

- This is where Canonical MaaS comes in. There are several interfaces and platforms for bare metal server lifecycle management (Metal3, Tinkerbell, Ironic, etc.), but perhaps the most popular and mature one (in development for almost ten years) is Canonical MaaS. MaaS allows bare metal servers to be managed via a rich set of APIs similar to an IaaS cloud: everything from allocating machines and configuring networking to deploying an operating system. What makes MaaS appealing is its broad hardware support, covering Cisco, Dell, HP and most other vendors through IPMI or Redfish interfaces.

To support provisioning across various environments (clouds, data centers, edge locations), Cluster API provides an abstraction layer in which each environment is implemented in a separate “provider” component. This provider is the bridge between the Cluster API abstractions (Cluster, Machine, etc.) and the underlying environment. For instance, given a Cluster/AWSCluster resource, Cluster API with the “AWS” provider component manages the infrastructure on AWS (the lifecycle of the VPCs, subnets, EC2 instances, load balancers, etc.).
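To make the provider split concrete, here is a minimal Python sketch that builds a Cluster API `Cluster` object as a plain dict. The generic `Cluster` stays the same across environments; only its `infrastructureRef` changes to name a provider-specific object such as `AWSCluster` or `MaasCluster`. The `v1alpha3` apiVersions assume Cluster API v0.3, the MaasCluster apiVersion in particular is an assumption, and a real manifest would also carry a `controlPlaneRef`, omitted here for brevity.

```python
# Sketch only: apiVersions assume Cluster API v0.3 (v1alpha3); the MaasCluster
# apiVersion is an assumption, so check the provider's release manifests.
CAPI_API = "cluster.x-k8s.io/v1alpha3"
INFRA_API = "infrastructure.cluster.x-k8s.io/v1alpha3"  # assumed for MaasCluster


def cluster_manifest(name: str, infra_kind: str) -> dict:
    """Build a generic Cluster that delegates infrastructure to `infra_kind`."""
    return {
        "apiVersion": CAPI_API,
        "kind": "Cluster",
        "metadata": {"name": name},
        "spec": {
            # The generic Cluster only references the infrastructure object;
            # whichever provider owns that kind (AWS, MaaS, ...) reconciles it.
            # (A real manifest also needs a controlPlaneRef, omitted here.)
            "infrastructureRef": {
                "apiVersion": INFRA_API,
                "kind": infra_kind,
                "name": name,
            },
        },
    }


aws_cluster = cluster_manifest("cloud-cluster", "AWSCluster")
maas_cluster = cluster_manifest("bm-cluster", "MaasCluster")

# Same Cluster kind in both cases; only the infrastructure reference differs.
print(aws_cluster["kind"], aws_cluster["spec"]["infrastructureRef"]["kind"])    # → Cluster AWSCluster
print(maas_cluster["kind"], maas_cluster["spec"]["infrastructureRef"]["kind"])  # → Cluster MaasCluster
```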

In other words, for anyone to use Canonical MaaS to present bare metal servers to be managed by Cluster API, a provider needed to be developed. With more of our customers exploring the option of running bare metal K8s, this is an area we could not ignore, and our team here at Spectro Cloud is happy to announce the release of the first Cluster API MaaS provider (“cluster-api-provider-maas”), making bare metal K8s easy for anyone.

This provider uses Canonical MaaS to provision, allocate and deploy machines, configure machine networking, and manage DNS mappings for all control plane nodes. Once the user creates the corresponding Cluster API custom resources for Cluster/MaasCluster, the Cluster API MaaS provider programmatically allocates and deploys bare metal machines registered with Canonical MaaS. MaaS powers on the machines, PXE boots them and installs the designated operating system. Once the machines are up, Cluster API initializes the K8s control plane nodes and then joins the worker machines into the K8s cluster. The provider supports the standard Cluster API capabilities, such as provisioning multi-master, K8s-conformant clusters, security best practices, rolling upgrades, etc.
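The sequence described above can be sketched as a toy Python model that lists, per machine, what MaaS does and what Cluster API does next. It assumes the default kubeadm bootstrap provider and uses hypothetical machine names; the real controllers reconcile concurrently and retry on failure rather than following a fixed script, so treat this only as an illustration of the ordering.

```python
def provisioning_plan(control_planes: int, workers: int) -> list:
    """Return (machine, action) pairs in the order described above.

    Toy model with hypothetical machine names; the real controllers reconcile
    concurrently and retry, rather than following a fixed script.
    """
    plan = []
    for i in range(control_planes):
        node = f"cp-{i}"
        # MaaS: allocate the machine, power it on, PXE boot, install the OS.
        plan.append((node, "maas: allocate + deploy OS"))
        # The first control plane node initializes the cluster; others join it.
        plan.append((node, "kubeadm init" if i == 0 else "kubeadm join --control-plane"))
    for i in range(workers):
        node = f"worker-{i}"
        plan.append((node, "maas: allocate + deploy OS"))
        # Workers join only after a control plane exists to join.
        plan.append((node, "kubeadm join"))
    return plan


for machine, action in provisioning_plan(control_planes=1, workers=2):
    print(f"{machine:10} {action}")
```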

Essentially, this means that the lifecycle of the bare metal infrastructure can now (finally) be tied to the overall Kubernetes environment’s lifecycle management.

“Demo” time

In the section below, we'll walk you through step-by-step instructions for provisioning your very own bare metal K8s cluster. We’ll cover setting up Canonical MaaS, setting up the Cluster API framework, and then, of course, provisioning the K8s cluster. So let’s get started!

1. Get that Canonical MaaS running

Before we can provision bare metal K8s clusters using Cluster API, we need to have Canonical MaaS configured with some machines ready to go. If you haven’t already, please follow the MaaS guide for setting up MaaS on Ubuntu: https://maas.io/docs/snap/3.0/ui/maas-installation.

We’d recommend that you have at least 7 bare metal machines available in the MaaS interface (3 for CP, 3 for workers, and 1 reserved for upgrades). However, if you need to get by on the minimum required, aim for 2 available machines (1 for CP, 1 for worker).
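The recommended machine count is simple arithmetic, sketched below in Python. It assumes the Cluster API default of surging by one machine during a rolling upgrade (a replacement is created before an old machine is removed), which is why one spare is reserved; the function name is hypothetical.

```python
def machines_needed(control_planes: int, workers: int, upgrade_spares: int = 1) -> int:
    """Machines that must be available in MaaS for the cluster plus upgrades."""
    # Rolling upgrades create a replacement machine before deleting an old one
    # (surge of 1 by default), so a free spare lets an upgrade proceed.
    return control_planes + workers + upgrade_spares


print(machines_needed(3, 3))                    # → 7 (recommended setup)
print(machines_needed(1, 1, upgrade_spares=0))  # → 2 (bare minimum, no room to surge)
```

With the two-machine minimum there is no free machine to surge into, so plan on adding one before trying an upgrade.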

NOTE: MaaS can manage hardware from a wide range of vendors through their BMC/IPMI capabilities. If you’re just following along and need a simple way to test, you can even launch VMs on vSphere (or even VirtualBox) and have MaaS manage their lifecycle.

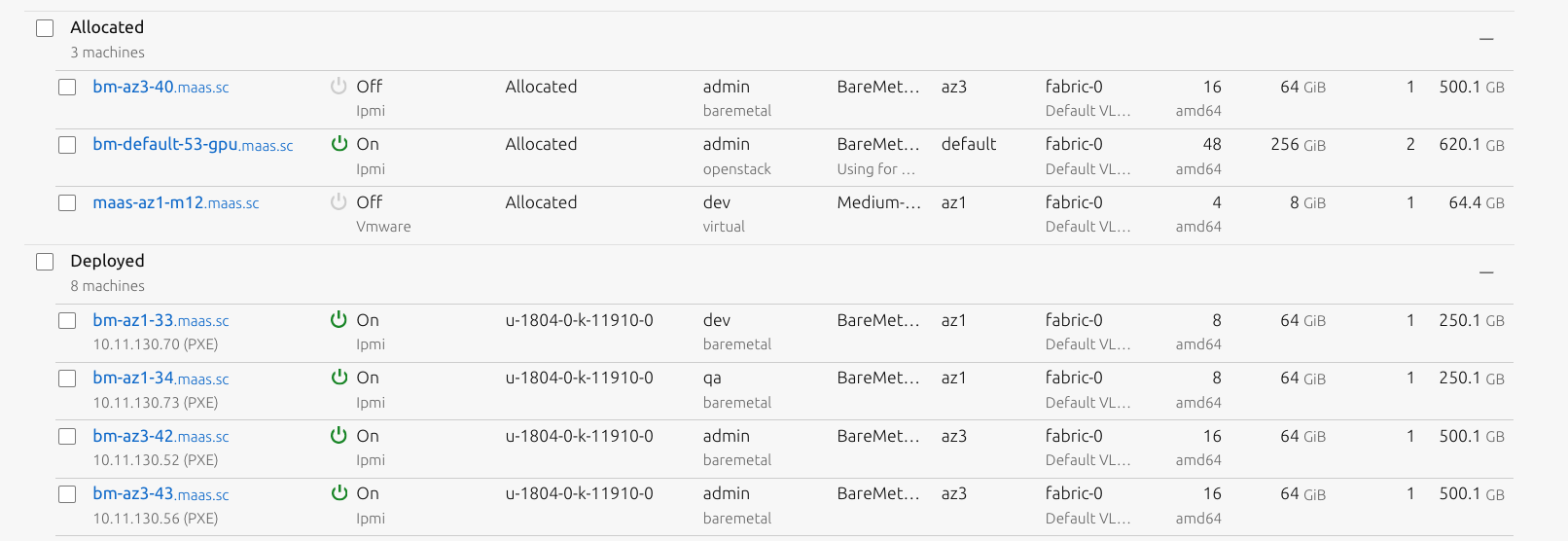

Figure 2: MaaS machines in an allocated and deployed state

As part of the Cluster API setup later on, we’ll need the IP address and an API key for MaaS. The API key can be found in your user profile settings in the MaaS UI.
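Before pasting these values anywhere, a quick sanity check can save a failed provider start. The Python sketch below builds the endpoint URL from the MaaS host (assuming the default MaaS port 5240 and the `/MAAS` path) and checks that the API key has the three colon-separated parts MaaS uses (consumer key, token key, token secret). The function names and the `192.0.2.10` address are hypothetical examples.

```python
def maas_endpoint(host: str, port: int = 5240) -> str:
    """MaaS API endpoint URL; 5240 is the default MaaS HTTP port."""
    return f"http://{host}:{port}/MAAS"


def split_api_key(api_key: str) -> tuple:
    """Split a MaaS API key into (consumer_key, token_key, token_secret)."""
    parts = api_key.strip().split(":")
    if len(parts) != 3 or not all(parts):
        raise ValueError("expected <consumer_key>:<token_key>:<token_secret>")
    return tuple(parts)


print(maas_endpoint("192.0.2.10"))  # → http://192.0.2.10:5240/MAAS
```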

Finally, we’ll upload an operating system image that will be used for the control plane and worker nodes. Please refer to this page for instructions on uploading images to Canonical MaaS: https://github.com/spectrocloud/cluster-api-provider-maas/tree/main/image-generation. If you don’t have a custom K8s-ready image, no worries: Spectro Cloud releases security-hardened images available for anyone to use.

2. Setting up a management cluster using Kind

Cluster API uses K8s as the orchestrator to manage other K8s clusters. We will first set up a **Management Cluster** using Kind. If you already have a K8s cluster available, feel free to skip this step.

If you’re looking for a quick solution to run K8s on your workstation, take a look at CNCF Kind clusters and follow their quick guide to get your cluster up and running.
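If you prefer to script this step, a small Python wrapper around the kind CLI is sketched below. It only uses `kind create cluster --name`, which creates the cluster in Docker and switches kubectl's context to `kind-<name>`; the `capi-mgmt` name is a hypothetical example, and Docker plus kind must already be installed.

```python
import shutil
import subprocess


def kind_create_cmd(name: str = "capi-mgmt") -> list:
    """argv for `kind create cluster`; the cluster name is a hypothetical example."""
    return ["kind", "create", "cluster", "--name", name]


def create_management_cluster(name: str = "capi-mgmt") -> None:
    """Run kind if it is on PATH; kind then sets kubectl's context to kind-<name>."""
    if shutil.which("kind") is None:
        raise RuntimeError("kind is not installed or not on PATH")
    subprocess.run(kind_create_cmd(name), check=True)
```

Calling `create_management_cluster()` is equivalent to running the command by hand; keeping the argv builder separate makes it easy to inspect what will be executed.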

Make sure the cluster is healthy and that all pods are Running:
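One way to automate this check is sketched below in Python: it reads the JSON from `kubectl get pods -A -o json` and reports any pod whose `status.phase` is not `Running`. Note that completed one-off pods report `Succeeded`, so on clusters that run jobs you may want to allow that phase too; the function names are hypothetical.

```python
import json
import subprocess


def not_running_pods(pods_json: str) -> list:
    """namespace/name of every pod whose status.phase is not Running."""
    pods = json.loads(pods_json)["items"]
    return [
        f'{p["metadata"]["namespace"]}/{p["metadata"]["name"]}'
        for p in pods
        if p.get("status", {}).get("phase") != "Running"
    ]


def check_management_cluster() -> list:
    """Query all namespaces with kubectl and return the pods that are not Running."""
    out = subprocess.run(
        ["kubectl", "get", "pods", "-A", "-o", "json"],
        capture_output=True, text=True, check=True,
    ).stdout
    return not_running_pods(out)
```

An empty list from `check_management_cluster()` means every pod is Running.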

Great, now you have your Management Cluster running! This will be the control plane for launching the bare metal K8s clusters.

3. Installing Cluster API

In the next few steps, we install the Cluster API orchestrator components.

Start by downloading the clusterctl utility by following the instructions for your operating system here: https://release-0-3.cluster-api.sigs.k8s.io/user/quick-start.html#install-clusterctl. Make sure to download version 0.3!
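To confirm you grabbed a 0.3 release, you can check the output of `clusterctl version`. The Python sketch below assumes that output contains a `GitVersion:"vX.Y.Z"` style token and simply tests whether the first `vX.Y.Z` it finds is a 0.3.x version; the exact output format is an assumption, so eyeball it once before relying on this.

```python
import re


def is_v0_3(version_output: str) -> bool:
    """True if the first vX.Y.Z token in the output is a 0.3.x release."""
    match = re.search(r"v(\d+)\.(\d+)\.(\d+)", version_output)
    return match is not None and (int(match.group(1)), int(match.group(2))) == (0, 3)
```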

Run the following to install the base Cluster API:
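The original command isn't preserved here, so as a hedged reconstruction: base Cluster API is typically installed with `clusterctl init`, which sets up the core provider plus the kubeadm bootstrap and control plane providers. The Python sketch below builds that argv, optionally pinning every provider to one release with clusterctl's `name:version` syntax; the version string is a hypothetical example and should match your clusterctl 0.3.x.

```python
from typing import Optional


def clusterctl_init_cmd(version: Optional[str] = None) -> list:
    """argv for installing core Cluster API and the kubeadm bootstrap and
    control plane providers, optionally pinned to one release (e.g. a v0.3.x tag)."""
    suffix = f":{version}" if version else ""
    return [
        "clusterctl", "init",
        "--core", f"cluster-api{suffix}",
        "--bootstrap", f"kubeadm{suffix}",
        "--control-plane", f"kubeadm{suffix}",
    ]
```

Pass the result to `subprocess.run(..., check=True)` against the management cluster's kubeconfig context.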

Next, install the latest version of the *cluster-api-provider-maas* provider:

In the next snippet, make sure to replace *MAAS_ENDPOINT* and *MAAS_API_KEY* with the MaaS endpoint and MaaS API key, respectively, from the MaaS prerequisites section above:
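For reference, the credentials boil down to a standard Kubernetes Secret holding `MAAS_ENDPOINT` and `MAAS_API_KEY`. The Python sketch below builds such a Secret with base64-encoded `data` values, as the Kubernetes API requires. The Secret name and namespace defaults are hypothetical; check the provider's install manifests for the names its controller actually reads.

```python
import base64


def maas_credentials_secret(endpoint: str, api_key: str,
                            name: str = "maas-credentials",             # hypothetical
                            namespace: str = "capmaas-system") -> dict:  # hypothetical
    """Build a v1 Secret carrying the MaaS endpoint and API key."""
    def b64(value: str) -> str:
        return base64.b64encode(value.encode()).decode()

    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        # Secret.data values must be base64-encoded strings.
        "data": {"MAAS_ENDPOINT": b64(endpoint), "MAAS_API_KEY": b64(api_key)},
    }
```

Dump it with `json.dumps` and feed it to `kubectl apply -f -`; kubectl accepts JSON as well as YAML.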

And restart the MaaS provider pod:
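A restart is needed so the provider's controller picks up the new credentials. A minimal Python sketch of the commands is below, using `kubectl rollout restart` (which replaces a Deployment's pods without deleting the Deployment) followed by `kubectl rollout status` to wait for it. The deployment and namespace names are hypothetical placeholders.

```python
def restart_provider_cmds(deployment: str, namespace: str) -> list:
    """argv lists: restart a Deployment's pods, then wait for the rollout."""
    target = f"deployment/{deployment}"
    return [
        ["kubectl", "rollout", "restart", target, "-n", namespace],
        ["kubectl", "rollout", "status", target, "-n", namespace],
    ]
```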

4. Launching the first bare metal cluster!

Time to launch the first bare-metal cluster. Start by saving the following file to your local directory:

To work with your MaaS environment, the following parameters may need to be modified:

- MaasCluster.spec.dnsDomain - the MaaS DNS domain to attach

- MaasMachineTemplate.template.spec - in addition to minCPU and minMemory, there are other properties, such as limiting machines to a specific resource pool or zone.

- MaasMachineTemplate.template.spec.image - the image to use, e.g. u-1804-0-k-11913-0.
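To see where those parameters live, the Python sketch below generates the MaaS-specific objects as a single `v1` List that `kubectl apply -f cluster.json` can apply in one go. The apiVersion and the `spec.template.spec` nesting are assumptions (mirroring other Cluster API machine templates), the memory unit is assumed to be MiB, and the names, CPU and memory values are hypothetical; verify everything against the provider's CRDs.

```python
import json

# Assumed apiVersion and field layout (spec.template.spec, as in other
# Cluster API machine templates); verify against the provider's CRDs.
INFRA_API = "infrastructure.cluster.x-k8s.io/v1alpha3"


def maas_cluster(name: str, dns_domain: str) -> dict:
    return {"apiVersion": INFRA_API, "kind": "MaasCluster",
            "metadata": {"name": name},
            "spec": {"dnsDomain": dns_domain}}


def maas_machine_template(name: str, image: str, min_cpu: int, min_memory: int) -> dict:
    # min_memory's unit is not stated in the text; check the CRD (MiB assumed).
    return {"apiVersion": INFRA_API, "kind": "MaasMachineTemplate",
            "metadata": {"name": name},
            "spec": {"template": {"spec": {
                "image": image, "minCPU": min_cpu, "minMemory": min_memory}}}}


def as_kubectl_list(*objects: dict) -> str:
    """Wrap objects in a v1 List so `kubectl apply -f file.json` applies them together."""
    return json.dumps({"apiVersion": "v1", "kind": "List", "items": list(objects)}, indent=2)


manifest = as_kubectl_list(
    maas_cluster("bm-cluster", "maas"),  # "maas" is a placeholder DNS domain
    maas_machine_template("bm-cluster-cp", "u-1804-0-k-11913-0", min_cpu=4, min_memory=8192),
)
```

A complete cluster definition also needs the generic Cluster API objects (Cluster, KubeadmControlPlane, MachineDeployment and bootstrap templates) that reference these.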

And then to start provisioning the cluster:

Cluster API will begin provisioning the cluster. We can monitor the state of the provisioning using:

Note: You can ignore a failing NodeHealthy condition at this stage; it usually indicates that the node network isn’t ready because no CNI has been installed yet.
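If you want to watch progress programmatically, the Python sketch below reads `kubectl get machines -o json` and maps each Machine to its `status.phase` (Cluster API phases include Pending, Provisioning, Provisioned, Running and Failed). A second helper lists conditions that are not `True` while skipping `NodeHealthy`, in line with the note above. Function names are hypothetical.

```python
import json


def machine_phases(machines_json: str) -> dict:
    """Map Machine name -> status.phase (Pending, Provisioning, Provisioned, Running, ...)."""
    items = json.loads(machines_json)["items"]
    return {m["metadata"]["name"]: m.get("status", {}).get("phase", "Unknown") for m in items}


def failing_conditions(machine: dict, ignore: tuple = ("NodeHealthy",)) -> list:
    """Condition types that are not True, skipping NodeHealthy until a CNI is installed."""
    conditions = machine.get("status", {}).get("conditions", [])
    return [c["type"] for c in conditions
            if c.get("status") != "True" and c["type"] not in ignore]
```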

If there are any errors during provisioning, describe the corresponding Machine or MaasMachine object:
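Besides `kubectl describe`, a Machine records terminal problems in `status.failureReason` and `status.failureMessage`. The Python sketch below builds the describe command and summarizes those two fields from an object's JSON. That MaasMachine objects expose the same fields is an assumption, and the object names in the usage are hypothetical.

```python
from typing import Optional


def describe_cmd(kind: str, name: str, namespace: str = "default") -> list:
    """argv for `kubectl describe`, e.g. kind="machine" or kind="maasmachine"."""
    return ["kubectl", "describe", kind, name, "-n", namespace]


def failure_details(obj: dict) -> Optional[str]:
    """Summarize status.failureReason / status.failureMessage, if either is set."""
    status = obj.get("status", {})
    reason, message = status.get("failureReason"), status.get("failureMessage")
    if not reason and not message:
        return None
    if not message:
        return reason
    return f"{reason or 'Unknown'}: {message}"
```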

Once the cluster is fully provisioned, download the workload cluster’s kubeconfig:
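Cluster API stores the workload cluster's admin kubeconfig in a Secret named `<cluster-name>-kubeconfig` in the management cluster, base64-encoded under the `value` key. The Python sketch below decodes it from the output of `kubectl get secret <cluster-name>-kubeconfig -o json`; the function name is hypothetical.

```python
import base64
import json


def kubeconfig_from_secret(secret_json: str) -> str:
    """Decode the kubeconfig stored under data.value in the <cluster>-kubeconfig Secret."""
    secret = json.loads(secret_json)
    return base64.b64decode(secret["data"]["value"]).decode()
```

Write the returned text to a file and point kubectl at it with `--kubeconfig`.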

Install a CNI, like Cilium:
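Once the CNI is running, the nodes should report `Ready` (and the Machines' NodeHealthy condition should clear). The Python sketch below checks this from `kubectl --kubeconfig <workload-kubeconfig> get nodes -o json` by looking for a `Ready` condition with status `True`; the function name is hypothetical.

```python
import json


def not_ready_nodes(nodes_json: str) -> list:
    """Names of nodes whose Ready condition is not True."""
    names = []
    for node in json.loads(nodes_json)["items"]:
        conditions = node.get("status", {}).get("conditions", [])
        ready = any(c.get("type") == "Ready" and c.get("status") == "True" for c in conditions)
        if not ready:
            names.append(node["metadata"]["name"])
    return names
```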

Congrats! You now have a fully-provisioned bare metal K8s cluster.

Now that you are treating your bare metal clusters just like any other cluster in your K8s environment, there are a number of other interesting things you can try:

- Deploy an app - keep it simple with

- How about scaling the workers from 3 to 6?

- Upgrades are as simple as changing the image version to a newer one, e.g. u-18-k-12101.

- Want to see resiliency in action? Try killing one of the nodes: “yank” the power cable of the bare metal server (or just power off the machine) and see what happens.
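For the scaling experiment, a MachineDeployment exposes a scale subresource, so `kubectl scale` works on it directly. The Python sketch below builds that command and the equivalent JSON merge patch for `kubectl patch --type merge`. The MachineDeployment name is a hypothetical placeholder, and keep in mind that each extra worker needs a free machine in MaaS.

```python
import json


def scale_cmd(machine_deployment: str, replicas: int) -> list:
    """argv to scale a MachineDeployment via its scale subresource."""
    if replicas < 0:
        raise ValueError("replicas must be >= 0")
    return ["kubectl", "scale", f"machinedeployment/{machine_deployment}",
            f"--replicas={replicas}"]


def replicas_merge_patch(replicas: int) -> str:
    """Equivalent body for `kubectl patch machinedeployment <name> --type merge -p ...`."""
    return json.dumps({"spec": {"replicas": replicas}})
```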

The new Cluster API MaaS provider allows organizations to easily deploy, run and manage K8s clusters directly on top of bare metal servers, increasing performance and minimizing cost and operational effort. At the same time, Cluster API brings a modern, declarative approach to Kubernetes cluster lifecycle management, eliminating the need for heavy scripting and making it easier to unify the experience of provisioning and managing K8s nodes, now including bare metal servers!

Thank you for reading and don’t forget to sign up for our webinar next week where we will be demonstrating live how to set up the Cluster API provider for MaaS. In the meantime, if you have any questions about our provider or need help implementing it, please do reach out to @SaaMalik or email me at saad@spectrocloud.com.

Originally published at thenewstack.io
